Introduction

Apache Airflow is a powerful orchestration tool primarily used by data engineers. However, its capabilities can significantly benefit DevOps engineers as well. With Airflow, DevOps engineers can automate complex workflows and a wide range of infrastructure use cases.

Airflow is a platform for developing, scheduling, and monitoring batch-oriented workflows. It lets you define your tasks as a Directed Acyclic Graph (DAG), which makes the dependencies between tasks explicit: a task runs only after the tasks it depends on have finished.
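To make the idea of task dependencies concrete, here is a minimal sketch that uses only Python's standard-library `graphlib` module rather than Airflow itself. The task names are hypothetical. In Airflow, the same relationships would be declared between tasks inside a DAG definition, and the scheduler would work out the execution order.

```python
from graphlib import TopologicalSorter

# Hypothetical task names; each key maps to the set of tasks it depends on.
dependencies = {
    "collect_metrics": set(),
    "build_report": {"collect_metrics"},
    "send_report": {"build_report"},
}

# static_order() yields tasks so that every task comes after its dependencies.
execution_order = list(TopologicalSorter(dependencies).static_order())
print(execution_order)
```

Because the graph is acyclic, a valid order always exists; a circular dependency would make `TopologicalSorter` raise a `CycleError`.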

Here are some examples of how you can leverage Airflow as a DevOps engineer.

1: Simplified Self-Provisioning of Infrastructure

DevOps engineers can create DAGs in Airflow to automate the self-provisioning of infrastructure, such as EC2 instances. Provisioning through the AWS Console requires configuring numerous parameters by hand, which invites errors.

With Airflow, DevOps engineers can prefill essential parameters like VPC, OS image, and security groups. Developers then only need to pass instance-specific details, such as the instance name. This simplifies the provisioning process and keeps every instance consistent with company standards, without granting developers direct AWS account access.
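The prefilled-parameter idea can be sketched in plain Python. This is only an illustration under stated assumptions: the function name, the field names, and every ID below are hypothetical placeholders, and the actual call to AWS is left out. A provisioning task in a DAG could run logic like this before creating the instance.

```python
# Values the DevOps team fixes for everyone. All IDs below are placeholders.
PLATFORM_DEFAULTS = {
    "subnet_id": "subnet-EXAMPLE",
    "image_id": "ami-EXAMPLE",
    "security_group_ids": ["sg-EXAMPLE"],
    "instance_type": "t3.micro",
}

# The only fields a developer is allowed to supply (an assumption for this sketch).
ALLOWED_USER_FIELDS = {"instance_name"}


def build_instance_request(user_params: dict) -> dict:
    """Merge developer input with platform defaults, rejecting anything else."""
    unexpected = set(user_params) - ALLOWED_USER_FIELDS
    if unexpected:
        raise ValueError(f"Parameters not allowed: {sorted(unexpected)}")
    if not user_params.get("instance_name"):
        raise ValueError("instance_name is required")
    return {**PLATFORM_DEFAULTS, "instance_name": user_params["instance_name"]}


request = build_instance_request({"instance_name": "dev-api-01"})
print(request)
```

Rejecting unknown fields, rather than silently ignoring them, makes it obvious to a developer when they try to override a value the platform team controls.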

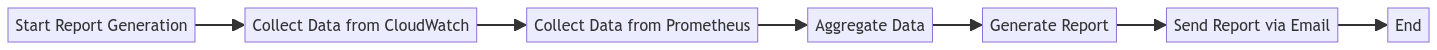

2: Detailed System Reports

Airflow enables the automation of periodic data collection from multiple sources, such as CloudWatch, Prometheus, and other monitoring systems. Engineers can write DAGs that aggregate this data to generate detailed daily reports. This process ensures that the overall system health is monitored consistently, providing valuable updates and early warnings about potential issues. Regular reporting helps in identifying trends, detecting anomalies, and making informed decisions about system improvements and maintenance. By automating this task, DevOps teams can save time and focus on more critical issues.
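As a rough illustration of the aggregation step, the sketch below turns metric samples from several sources into a plain-text daily report. The data layout, metric names, and sample values are made up for the example; in a real DAG, upstream tasks would fetch the samples from systems such as CloudWatch or Prometheus.

```python
from datetime import date


def build_daily_report(samples_by_source: dict, report_date: date) -> str:
    """Summarise per-source metric samples into a plain-text report."""
    lines = [f"System report for {report_date.isoformat()}"]
    for source, metrics in sorted(samples_by_source.items()):
        for name, values in sorted(metrics.items()):
            if not values:
                continue  # skip metrics with no samples for the day
            average = sum(values) / len(values)
            lines.append(
                f"{source}/{name}: avg={average:.1f} max={max(values):.1f}"
            )
    return "\n".join(lines)


# Made-up sample data standing in for what collection tasks would return.
samples = {
    "cloudwatch": {"cpu_percent": [40.0, 60.0, 80.0]},
    "prometheus": {"http_5xx_per_min": [0.0, 2.0]},
}
report = build_daily_report(samples, date(2024, 1, 1))
print(report)
```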

3: Continuous Compliance and Security

Security is a very important aspect of any infrastructure. DevOps engineers can configure security checks as DAGs based on their company's requirements. These DAGs can be scheduled to run periodically or on demand, ensuring continuous compliance and security. Automated security checks help in identifying vulnerabilities and misconfigurations promptly, thereby safeguarding the infrastructure against potential threats. Regular automated checks also ensure that security policies are consistently enforced, reducing the risk of human error and enhancing overall security posture.
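One possible check, shown here as a minimal sketch, flags security groups that expose administrative ports to the whole internet. The rule format is a simplified, hypothetical structure and the list of sensitive ports is an assumption; a real task would fetch the rules from the cloud provider's API and apply the company's own policy.

```python
SENSITIVE_PORTS = {22, 3389}  # SSH and RDP; adjust to company policy


def find_open_admin_ports(security_groups: list) -> list:
    """Return (group_id, port) pairs where a sensitive port is open to the world."""
    findings = []
    for group in security_groups:
        for rule in group["rules"]:
            if rule["cidr"] == "0.0.0.0/0" and rule["port"] in SENSITIVE_PORTS:
                findings.append((group["id"], rule["port"]))
    return findings


# Hypothetical, simplified rule data for illustration only.
groups = [
    {"id": "sg-web", "rules": [{"port": 443, "cidr": "0.0.0.0/0"}]},
    {"id": "sg-bastion", "rules": [{"port": 22, "cidr": "0.0.0.0/0"}]},
]
findings = find_open_admin_ports(groups)
print(findings)
```

A scheduled DAG could fail the task or send a notification whenever the list of findings is not empty.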

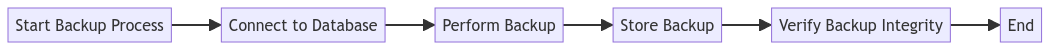

4: Automated Backups

With Airflow, DevOps engineers can automate database backups by creating DAGs that run backup tasks at specified intervals. This automation keeps backups running on schedule without manual intervention, reducing the risk of data loss and helping ensure that critical data stays retrievable.

Once a DAG is built to manage backups for a particular database type, other team members can reuse it for every new instance of that type.
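The reusable part of such a DAG is often just a pair of small helpers parameterized by database. The sketch below assumes PostgreSQL and an object-store layout of our own invention; the function names and key format are hypothetical. It only builds the `pg_dump` argument list and the destination key, and leaves running the command and uploading the file to the surrounding tasks.

```python
from datetime import datetime, timezone


def backup_object_key(database: str, run_time: datetime) -> str:
    """Build a dated object key so each run writes to its own location."""
    return (
        f"backups/{database}/{run_time:%Y/%m/%d}/"
        f"{database}-{run_time:%H%M%S}.dump"
    )


def pg_dump_command(host: str, database: str, user: str, output_path: str) -> list:
    """Build a pg_dump invocation as an argument list (no shell quoting needed)."""
    return [
        "pg_dump",
        f"--host={host}",
        f"--username={user}",
        f"--dbname={database}",
        "--format=custom",  # compressed archive format, restorable with pg_restore
        f"--file={output_path}",
    ]


run_time = datetime(2024, 1, 2, 3, 4, 5, tzinfo=timezone.utc)
key = backup_object_key("orders", run_time)
command = pg_dump_command("db.internal.example", "orders", "backup_user", "/tmp/orders.dump")
print(key)
print(command)
```

Because the database name, host, and user are plain arguments, the same helpers work unchanged for each new instance a team member adds.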

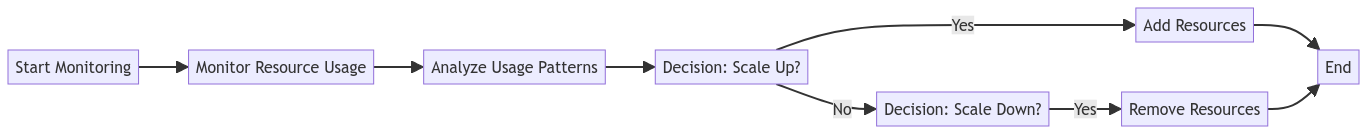

5: Dynamic Scaling Based on Demand

Airflow can be used to implement advanced autoscaling of resources in response to varying workloads. Because DAG tasks are written in Python, your scaling decisions can take into account parameters that existing autoscaling solutions may not support.

By creating DAGs that monitor usage patterns and resource demands, DevOps engineers can implement auto-scaling policies that add or remove resources as needed.
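As a sketch of what a custom scaling decision might look like, the function below combines two signals, CPU utilization and queue backlog, and clamps the result to fixed bounds. The signals, thresholds, and defaults are assumptions chosen for illustration, not recommended values; a real DAG would read the inputs from monitoring and apply the result through the platform's scaling API.

```python
import math


def desired_replicas(current: int, cpu_percent: float, queue_depth: int,
                     target_cpu: float = 60.0, jobs_per_replica: int = 50,
                     min_replicas: int = 1, max_replicas: int = 20) -> int:
    """Pick a replica count from CPU load and queue backlog, within bounds."""
    # Replicas needed to bring average CPU back to the target level.
    by_cpu = math.ceil(current * cpu_percent / target_cpu)
    # Replicas needed to work through the current backlog.
    by_queue = math.ceil(queue_depth / jobs_per_replica)
    wanted = max(by_cpu, by_queue)
    return max(min_replicas, min(max_replicas, wanted))


print(desired_replicas(current=4, cpu_percent=90.0, queue_depth=100))
```

Taking the larger of the two estimates means either signal alone can trigger a scale-up, while a scale-down happens only when both are low.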

6: Consistent Environment Setup

Managing configuration changes across multiple environments can be challenging. Airflow allows DevOps engineers to automate the deployment and configuration of software across environments.

By defining configurations in DAGs, engineers can ensure consistency and reduce the risk of configuration drift, leading to more stable and predictable environments. This automation helps in maintaining uniform settings across development, testing, and production environments.
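One way to detect configuration drift is to compare each environment's settings against a shared baseline. The sketch below does this with plain dictionaries; the setting names and values are hypothetical, and a real DAG would load the configurations from wherever they are stored.

```python
def find_drift(baseline: dict, environments: dict) -> dict:
    """Map each environment to the keys whose values differ from the baseline."""
    drift = {}
    for env_name, config in environments.items():
        # Record (expected, actual) for every baseline key that does not match.
        changed = {
            key: (expected, config.get(key))
            for key, expected in baseline.items()
            if config.get(key) != expected
        }
        if changed:
            drift[env_name] = changed
    return drift


# Hypothetical settings for illustration only.
baseline = {"log_level": "info", "tls_enabled": True}
environments = {
    "development": {"log_level": "info", "tls_enabled": True},
    "production": {"log_level": "debug", "tls_enabled": True},
}
drift = find_drift(baseline, environments)
print(drift)
```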

7: Rapid and Coordinated Response

When incidents occur, timely response is critical. Airflow can be employed to automate incident response workflows. By creating DAGs that trigger specific actions based on alerts from monitoring systems, DevOps engineers can ensure rapid and coordinated responses to incidents, minimizing downtime and impact on users. Automated incident response can include steps such as notifying relevant teams, isolating affected systems, and initiating recovery procedures, thereby streamlining the resolution process.

Once the incident response logic is written as a DAG, any team member can run it manually, or it can run automatically based on a trigger.
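The mapping from alerts to actions can be sketched as a small lookup of runbooks. The alert types and step names below are hypothetical; in a DAG, each step would correspond to a task, and the alert payload would arrive with the trigger.

```python
# Hypothetical runbooks: alert type -> ordered response steps.
RUNBOOKS = {
    "disk_full": ["notify_oncall", "rotate_logs", "verify_free_space"],
    "service_down": ["notify_oncall", "isolate_instance", "restart_service"],
}
DEFAULT_STEPS = ["notify_oncall"]


def plan_response(alert: dict) -> list:
    """Return the ordered steps for an alert, falling back to notification only."""
    return list(RUNBOOKS.get(alert.get("type"), DEFAULT_STEPS))


steps = plan_response({"type": "service_down", "host": "api-01"})
print(steps)
```

Falling back to a notification for unknown alert types means an unexpected alert still reaches a human instead of being dropped.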

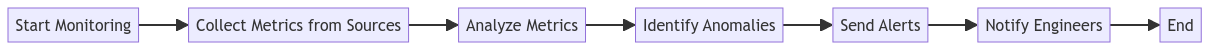

8: Proactive System Monitoring

Airflow can extend your monitoring capabilities by automating the aggregation and analysis of metrics from various sources. DevOps engineers can create DAGs that check infrastructure health on a regular schedule and set up alerting mechanisms for anomalies. This proactive monitoring approach helps in identifying and resolving issues before they escalate, ensuring system stability and performance. Automated alerts can notify engineers of potential problems, allowing for swift intervention and minimizing disruptions.
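A simple form of anomaly detection, shown here as a sketch, compares the latest value of a metric with its recent history and flags it when it lies several standard deviations from the mean. The threshold of three standard deviations and the sample values are assumptions for illustration; production systems often need more robust methods.

```python
from statistics import mean, stdev


def is_anomalous(history: list, latest: float, threshold: float = 3.0) -> bool:
    """Flag `latest` if it lies more than `threshold` standard deviations from the mean."""
    if len(history) < 2:
        return False  # not enough data to estimate spread
    spread = stdev(history)
    if spread == 0:
        # A perfectly flat history: any different value is a change worth flagging.
        return latest != history[0]
    return abs(latest - mean(history)) / spread > threshold


# Made-up CPU readings for illustration.
cpu_history = [50, 52, 48, 51, 49]
print(is_anomalous(cpu_history, 95))
print(is_anomalous(cpu_history, 51))
```

A monitoring DAG could run a check like this for each metric on every scheduled run and send an alert when it returns `True`.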

Conclusion

Apache Airflow offers a powerful platform for DevOps engineers to automate, manage, and improve various operational workflows. By using Airflow's capabilities, DevOps teams can achieve greater efficiency, reliability, and security in their infrastructure management and operations.

Want to know how you can automate PostgreSQL backups to S3 using Apache Airflow? Check out our detailed article: https://www.kubeblogs.com/automate-postgresql-backups-to-s3/

At kubeNine we have built solutions to automate a lot of these use cases using DevOps. Feel free to reach out to us for more information!