Non-production workloads running on Kubernetes need not be up 24/7. To cut costs, we can shut many of them down outside working hours and on weekends. In large environments, this small change can add up to significant savings.

Snorlax helps you do exactly that by automatically putting your workloads to sleep when they aren’t needed and waking them when they are.

In this blog, we’ll walk through how Snorlax works, what limitations to watch for, and how we tested it in our own cluster to validate its impact.

The Problem: Always-On Clusters Waste Resources

Most dev and test workloads sit idle overnight and on weekends but still consume compute. Shutting them down during these off-hours can significantly reduce cost and overhead, yet few teams do it consistently and many don’t do it at all.

What Snorlax Does

Snorlax is a Kubernetes operator that automates workload scaling on a defined schedule. It scales Deployments to zero at sleep time, and restores them at wake time.

A SleepSchedule CRD defines which deployments to manage, when to sleep and wake, and which ingresses to reroute during downtime.

apiVersion: snorlax.moonbeam.nyc/v1beta1
kind: SleepSchedule
metadata:
  name: qa-env-schedule
  namespace: qa
spec:
  wakeTime: "08:00am"
  sleepTime: "10:00pm"
  timezone: "Asia/Kolkata"
  deployments:
    - name: frontend
    - name: backend
    - name: redis
  ingresses:
    - name: qa-app-ingress
      requires:
        - deployment:
            name: frontend

Key features:

- Ingress controller awareness: Automatically detects and configures supported ingress controllers (e.g., NGINX, ALB).

- Wake-on-request: Redirects traffic to a wake-up page and scales services on demand.

- ELB health check handling: Ignores health checks to avoid unintentional wake-ups.

- Wake persistence: Keeps services awake until the next scheduled sleep if accessed during off-hours.
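The schedule semantics described above can be sketched in a few lines of Python. This is a minimal illustration of how a `wakeTime`/`sleepTime`/`timezone` triple might be evaluated, not Snorlax's actual implementation; the function names `parse_clock`, `is_awake`, and `is_awake_at` are made up for this example, and the half-open window (awake from the wake time up to, but not including, the sleep time) is an assumption.

```python
from datetime import datetime, time
from zoneinfo import ZoneInfo


def parse_clock(text: str) -> time:
    """Parse a 12-hour clock string such as '8:00am' or '10:00pm'."""
    return datetime.strptime(text.strip().upper(), "%I:%M%p").time()


def is_awake(local_now: time, wake: time, sleep: time) -> bool:
    """True when local_now falls inside the [wake, sleep) window."""
    if wake <= sleep:
        return wake <= local_now < sleep
    # The awake window wraps past midnight (for example wake 10pm, sleep 6am).
    return local_now >= wake or local_now < sleep


def is_awake_at(now_utc: datetime, wake: str, sleep: str, timezone: str) -> bool:
    """Convert an aware datetime to the schedule's timezone, then check the window."""
    local_now = now_utc.astimezone(ZoneInfo(timezone)).time()
    return is_awake(local_now, parse_clock(wake), parse_clock(sleep))


# Values taken from the qa-env-schedule example above.
wake, sleep = parse_clock("08:00am"), parse_clock("10:00pm")
print(is_awake(time(9, 30), wake, sleep))   # True
print(is_awake(time(23, 15), wake, sleep))  # False
```

Comparing local wall-clock times keeps the sketch short; it ignores wake-on-request and wake persistence, which would need extra state on top of the schedule.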

Real-World Demo: Snorlax in Action

To test Snorlax and see its capabilities firsthand, we set up a simple demo with an Nginx deployment and observed how it behaved. Here’s what we did:

1. Setting Up Our Test Environment

First, we installed Snorlax using Helm:

Add the Moonbeam Helm repository

helm repo add moonbeam https://moonbeam-nyc.github.io/helm-charts

helm repo update

Install Snorlax in our namespace

helm install snorlax moonbeam/snorlax --create-namespace --namespace snorlax

2. Creating a Test Deployment

We created a simple Nginx deployment with 2 replicas:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-demo
  namespace: huzaif
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx-demo
  template:
    metadata:
      labels:
        app: nginx-demo
    spec:
      containers:
        - name: nginx
          image: nginx:1.21

3. Applying a Sleep Schedule

Next, we created a SleepSchedule to manage our Nginx deployment:

apiVersion: snorlax.moonbeam.nyc/v1beta1
kind: SleepSchedule
metadata:
  namespace: huzaif
  name: nginx-demo-sleep
spec:
  wakeTime: '8:00am'
  sleepTime: '12:30pm'
  deployments:
    - name: nginx-demo
  timezone: 'Asia/Kolkata'

4. What We Observed

Initially, our Nginx deployment was running with 2 replicas as expected:

$ kubectl get deployment nginx-demo -n huzaif
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
nginx-demo   2/2     2            2           2m31s

When 12:30 PM IST arrived, Snorlax automatically scaled our deployment to zero:

$ kubectl get deployment nginx-demo -n huzaif
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
nginx-demo   0/0     0            0           14m

We even tried to manually scale it back up:

$ kubectl scale deployment nginx-demo -n huzaif --replicas=2
deployment.apps/nginx-demo scaled

But Snorlax immediately detected this and maintained the sleep state:

$ kubectl get deployment nginx-demo -n huzaif
NAME         READY   UP-TO-DATE   AVAILABLE   AGE
nginx-demo   0/0     0            0           14m

Within minutes of setup, Snorlax scaled our deployment down at the scheduled time and kept it asleep, reverting our manual attempt to scale it back up. In our test, at least, it enforced the sleep policy without any manual oversight.
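The way the manual scale-up was undone is typical of how Kubernetes operators work: they repeatedly compare the actual state with the desired state and correct any drift. The toy model below illustrates that idea only. It is not Snorlax's code, and the `Deployment` class, the `reconcile` function, and the `awake_replicas` parameter are hypothetical names chosen for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    replicas: int


def reconcile(deployment: Deployment, asleep: bool, awake_replicas: int) -> Deployment:
    """Return the deployment with the replica count the schedule wants."""
    desired = 0 if asleep else awake_replicas
    if deployment.replicas != desired:
        # A real controller would patch the Deployment through the Kubernetes API here.
        return Deployment(deployment.name, desired)
    return deployment


# Replay the demo: someone scales nginx-demo back to 2 during the sleep window.
tampered = Deployment("nginx-demo", replicas=2)
print(reconcile(tampered, asleep=True, awake_replicas=2))
# Deployment(name='nginx-demo', replicas=0)
```

Because the desired count is derived from the schedule rather than from whatever the Deployment currently says, a manual change during the sleep window is treated as drift and corrected on the next pass.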

Snorlax Alone Won’t Save You Money

Snorlax stops the pods—but not the nodes.

Unless you’re running a node autoscaler (such as Karpenter or the Kubernetes Cluster Autoscaler), idle infrastructure remains provisioned and costs stay the same.

To benefit financially, pair Snorlax with autoscaling so that unused nodes can be automatically deprovisioned when workloads sleep.

How to Maximize Savings with Snorlax

Use Cluster Autoscaler or Karpenter

Enables node-level scale-down for real savings. When Snorlax scales deployments to zero, the cluster autoscaler can detect the idle nodes and remove them, reducing your infrastructure footprint.

Avoid fixed/dedicated nodes

Don’t pin workloads to specific hardware with node affinities or taints that might prevent the autoscaler from removing nodes.

Sleep groups of workloads together

Frees up entire nodes for deprovisioning. If you sleep only a few pods on a node, the node will still run. Group related services on the same sleep schedule to empty entire nodes.
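To see why grouping matters, here is a small sketch that works out which nodes end up empty for a given set of sleeping deployments. The node names, the `metrics` deployment, and the placement are invented for illustration, and the model deliberately ignores DaemonSets and system pods, which in a real cluster also affect whether an autoscaler will remove a node.

```python
def nodes_freed(placement: dict[str, list[str]], sleeping: set[str]) -> list[str]:
    """Return nodes whose pods all belong to sleeping deployments."""
    return sorted(
        node
        for node, deployments in placement.items()
        if deployments and all(d in sleeping for d in deployments)
    )


# Hypothetical placement: which deployments have pods on which node.
placement = {
    "node-a": ["frontend", "backend"],
    "node-b": ["redis", "metrics"],
}

# Sleeping three of the four deployments empties only one node.
print(nodes_freed(placement, {"frontend", "backend", "redis"}))  # ['node-a']

# Adding the last deployment to the schedule empties both.
print(nodes_freed(placement, {"frontend", "backend", "redis", "metrics"}))  # ['node-a', 'node-b']
```

In the first case `node-b` keeps running for a single leftover workload, which is exactly the situation that a shared sleep schedule avoids.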

Leverage ingress wake-up

Users can still access sleeping environments if needed. With the wake-on-request feature, your services remain accessible; they just take a minute to start up.

Let services stay awake until next cycle

Snorlax ensures that once a service is woken (either scheduled or through ingress access), it stays awake until the next sleep cycle. This prevents constant scaling events and improves stability.

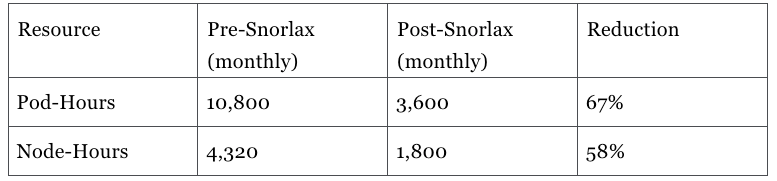

Quantifying the Savings

When properly implemented with cluster autoscaling, the resource savings can be substantial:

For a typical non-production environment with 15 deployments, this translates to an approximately 50-60% cost reduction.
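One way to sanity-check a figure like this is to compute what share of each day a schedule keeps workloads asleep. The sketch below does that arithmetic under two simplifying assumptions: node cost scales directly with the hours workloads are awake, and the autoscaler removes every node that is freed. Real savings will be lower if either assumption fails. The first two schedules come from the examples in this post; the 8am to 6pm schedule is hypothetical.

```python
def asleep_fraction(wake_hour: float, sleep_hour: float) -> float:
    """Share of each 24-hour day spent asleep for a once-a-day wake/sleep schedule."""
    awake_hours = (sleep_hour - wake_hour) % 24
    return 1 - awake_hours / 24


# Hours are on a 24-hour clock; 12.5 means 12:30pm.
for label, wake, sleep in [
    ("qa-env-schedule (8am to 10pm)", 8, 22),
    ("nginx-demo-sleep (8am to 12:30pm)", 8, 12.5),
    ("hypothetical 8am to 6pm", 8, 18),
]:
    print(f"{label}: asleep {asleep_fraction(wake, sleep):.1%} of the day")
```

Under these assumptions, a 50-60% reduction corresponds to workloads being awake for roughly 10 to 12 hours a day.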

Additional Benefits of Scheduled Sleep

Beyond cost savings, there are several other benefits:

- Reduced attack surface: Fewer running services means a smaller target for potential vulnerabilities

- Energy savings: Less compute means less power consumption and a greener footprint

- Clear usage patterns: Sleep/wake cycles create clear boundaries for when environments should be used

- Automatic enforcement: No more relying on manual processes to scale down environments

Should You Use Snorlax?

Recommended if:

- You run multiple non-prod workloads

- You already use (or plan to use) autoscaling

- Your workloads support cold starts and scale-to-zero

Not ideal if:

- Your infrastructure is static

- Apps require 24/7 instant availability

- Cold starts introduce unacceptable latency

Final Thoughts

Snorlax helps automate the often-overlooked practice of shutting down unused environments. It’s lightweight, Kubernetes-native, and easy to integrate. The ability to wake deployments on demand through ingress also means you don’t lose access to your environments; they just take a minute to start up when you need them.

But to get real cost savings, you need to combine it with autoscaling. That’s where the real value can be found.

Looking for more ways to optimize the cost of your Kubernetes environments? KubeNine can help. Reach out to us at contact@kubenine.com!