
Introduction

Is it even possible to exec into a running container in ECS? It definitely is, but it’s not straightforward to set up.

ECS Exec lets you connect to your containers on demand using the AWS CLI, giving you a way to inspect, debug, and fix issues inside your running services—without exposing them to external access.

In this guide, we’ll walk through setting up ECS Exec, checking configurations, handling common issues, and automating the whole process using Terraform. Whether you're troubleshooting a failed task or looking to simplify access for future deployments, this will help you get there.

Prerequisites

Install Required Tools

- AWS CLI

```bash
aws --version
```

The AWS CLI version should be 2.1.33 or later.

- Session Manager Plugin (Required for ECS Exec)

```bash
session-manager-plugin --version
```

If it is not installed, follow the Session Manager plugin installation instructions in the AWS documentation.

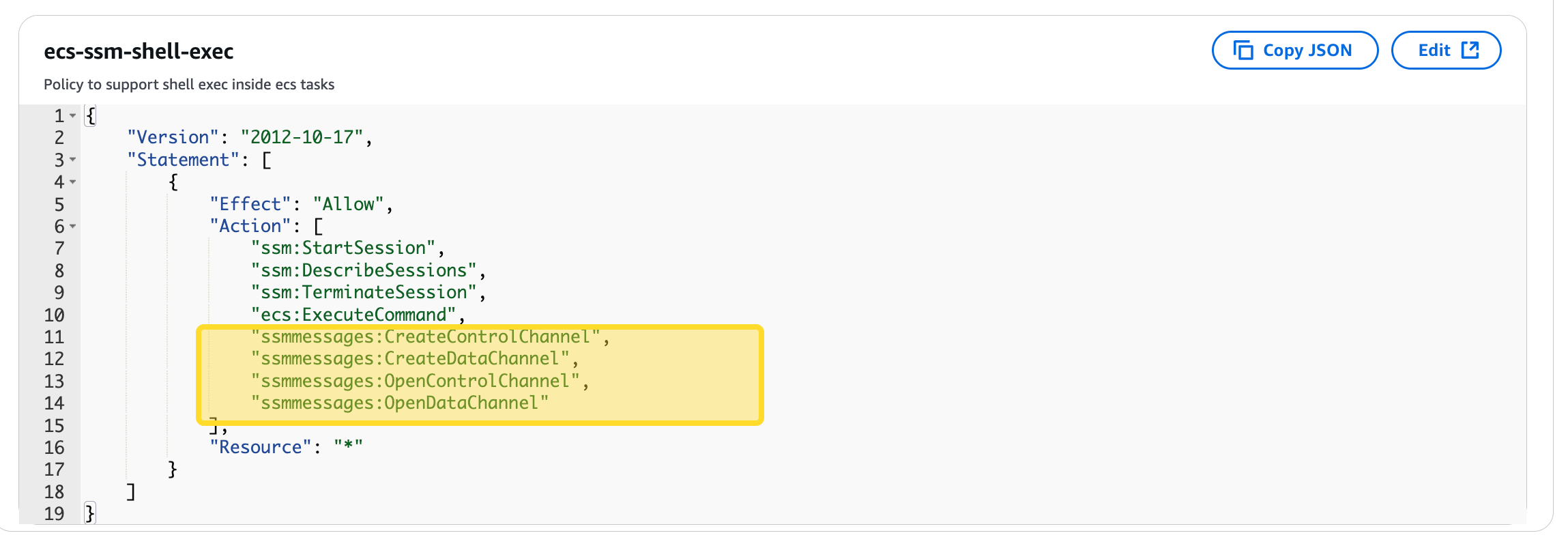
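Both checks can be scripted so they fail fast before you try ECS Exec. The sketch below assumes bash and GNU `sort -V` for version comparison. The function names (`version_ge`, `aws_cli_version`, `check_ecs_exec_prereqs`) are our own hypothetical helpers. The minimum version is the 2.1.33 stated above.

```bash
#!/usr/bin/env bash
# Hypothetical helpers (not part of any AWS tool) for checking the ECS Exec
# prerequisites. Assumes GNU `sort -V` is available.

MIN_AWS_CLI_VERSION="2.1.33"

# version_ge A B: succeeds when version A >= version B.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# aws_cli_version: prints only the version number from `aws --version`,
# whose output starts like "aws-cli/2.15.30 Python/3.11.6 ...".
aws_cli_version() {
  local out
  out="$(aws --version 2>&1)" || return 1
  out="${out#aws-cli/}"
  printf '%s\n' "${out%% *}"
}

# check_ecs_exec_prereqs: exit 0 only if the CLI is new enough and the
# Session Manager plugin is on PATH.
check_ecs_exec_prereqs() {
  local v
  if ! v="$(aws_cli_version)"; then
    echo "AWS CLI not found or not working" >&2
    return 1
  fi
  if ! version_ge "$v" "$MIN_AWS_CLI_VERSION"; then
    echo "AWS CLI $v is older than $MIN_AWS_CLI_VERSION" >&2
    return 1
  fi
  if ! command -v session-manager-plugin >/dev/null 2>&1; then
    echo "session-manager-plugin is not on PATH" >&2
    return 1
  fi
  echo "OK: AWS CLI $v and session-manager-plugin found"
}

# Example:
# check_ecs_exec_prereqs || exit 1
```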

IAM Permissions

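The ECS task role must allow the four ssmmessages actions below. This is the IAM role set as taskRoleArn in the task definition, which the containers run as. It is not the task execution role. These actions let the SSM agent inside the container open the ECS Exec channels. The ecs:ExecuteCommand permission is checked against the IAM user or role that runs aws ecs execute-command, so that identity needs it as well. The policy used in this setup: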

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ssm:StartSession",
        "ssm:DescribeSessions",
        "ssm:TerminateSession",
        "ecs:ExecuteCommand",
        "ssmmessages:CreateControlChannel",
        "ssmmessages:CreateDataChannel",
        "ssmmessages:OpenControlChannel",
        "ssmmessages:OpenDataChannel"
      ],
      "Resource": "*"
    }
  ]
}
```

Checking if ECS Exec is Enabled for a Service

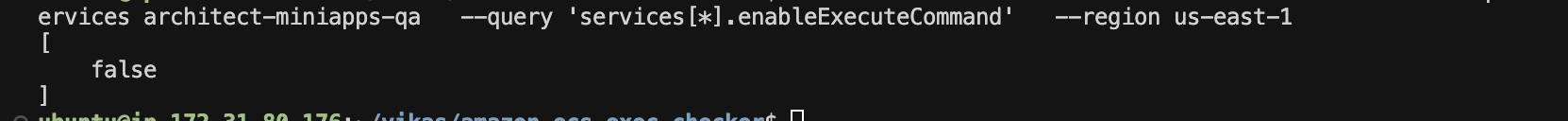

Run the following command to check if ECS Exec is enabled:

```bash
aws ecs describe-services \
  --cluster <your-cluster> \
  --services <your-service> \
  --query 'services[*].enableExecuteCommand' \
  --region <your-region>
```

If the output is true, ECS Exec is enabled. If false, it must be enabled as shown below.
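If you check many services, you can wrap the same query in a small function that returns an exit status instead of printing a value. This is a sketch, and `ecs_exec_enabled` is a hypothetical helper name. It uses `--output text`, which prints the JSON boolean as a word, so the comparison is made case-insensitive to be safe.

```bash
# ecs_exec_enabled CLUSTER SERVICE REGION
# Hypothetical helper: exit 0 if ECS Exec is enabled on the service,
# 1 if it is not, 2 if the AWS CLI call itself failed.
ecs_exec_enabled() {
  local flag
  flag="$(aws ecs describe-services \
    --cluster "$1" \
    --services "$2" \
    --query 'services[0].enableExecuteCommand' \
    --output text \
    --region "$3")" || return 2
  # Normalize "True"/"true" before comparing.
  [ "$(printf '%s' "$flag" | tr '[:upper:]' '[:lower:]')" = "true" ]
}

# Example:
# if ecs_exec_enabled my-cluster my-service us-east-1; then echo enabled; fi
```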

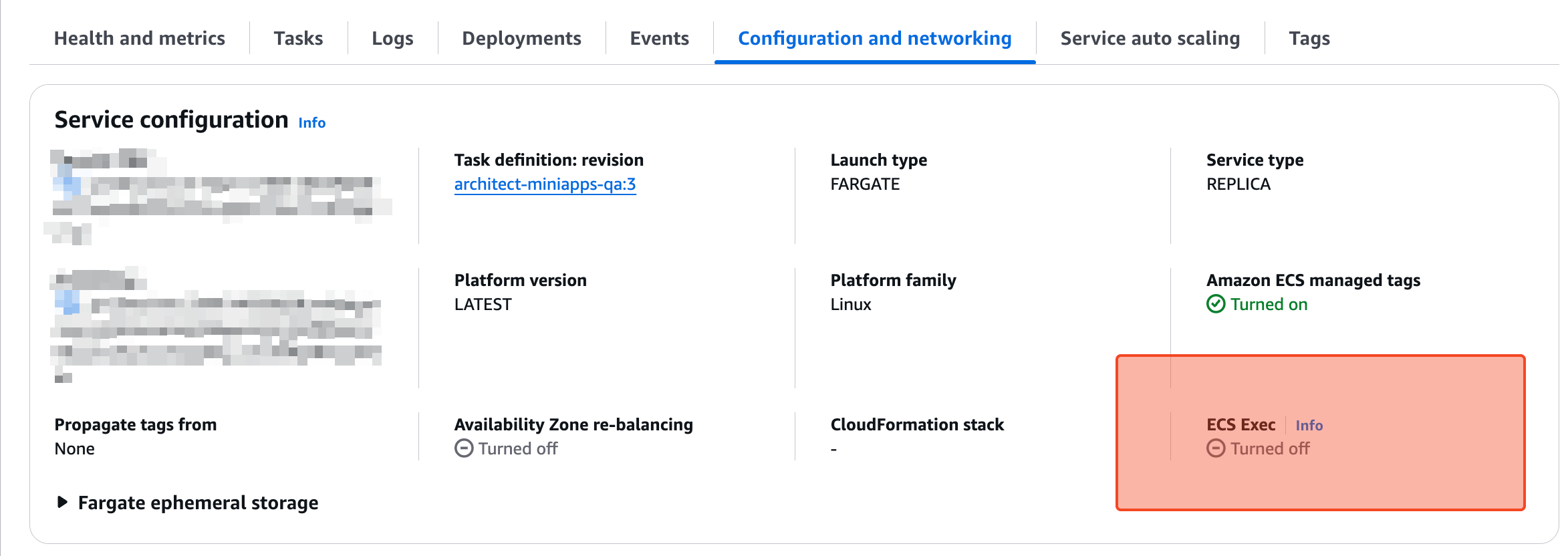

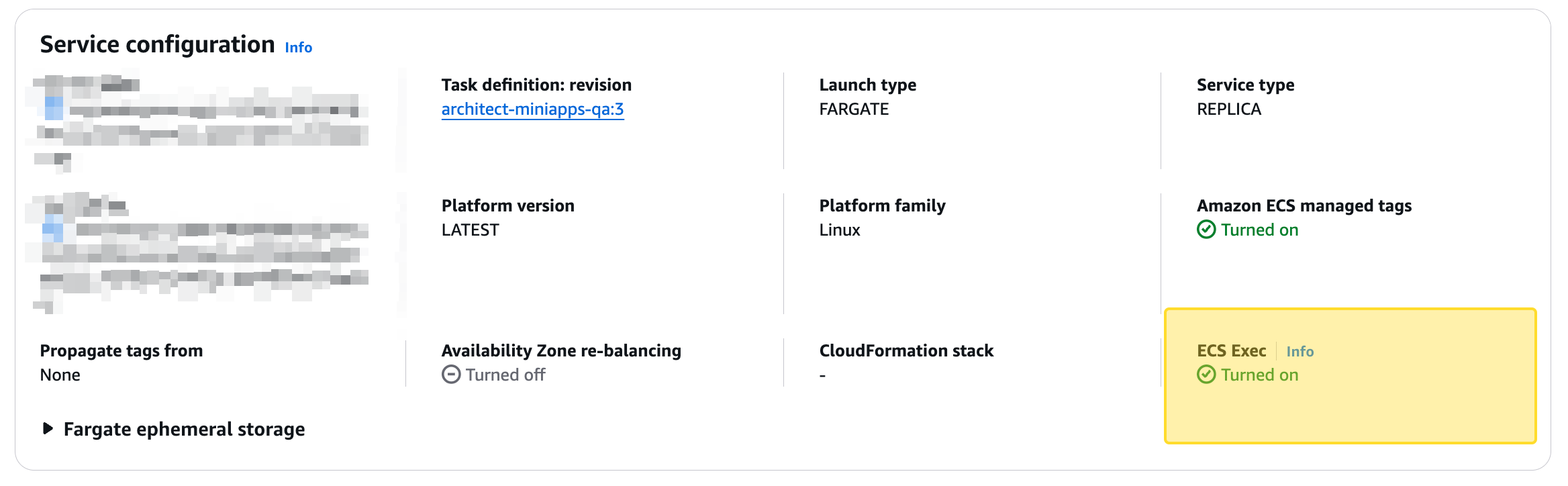

Checking ECS Exec via AWS Console

You can also check this in the AWS Console:

- Open the Amazon ECS Console.

- Navigate to Clusters and select your cluster.

- Click on the Services tab and select your service.

- Under the Networking section, look for the ECS Exec status.

If ECS Exec is not enabled, follow the next steps to enable it.


Step-by-Step: Enabling and Using ECS Exec in AWS Fargate

Step 1: Enable ECS Exec for the Service

Run the following command to enable ECS Exec on your ECS service:

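```bash
aws ecs update-service \
  --cluster <your-cluster> \
  --service <your-service> \
  --enable-execute-command \
  --region <your-region>
```

This setting applies only to tasks started after the update. Tasks that are already running keep ECS Exec disabled until they are replaced, for example by adding --force-new-deployment to the command above.

Step 2: Verify ECS Exec is Enabled at the Service Level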

After running the command, check that ECS Exec is enabled:

```bash
aws ecs describe-services \
  --cluster <your-cluster> \
  --services <your-service> \
  --query 'services[*].enableExecuteCommand' \
  --region <your-region>
```

If the output is true, ECS Exec is enabled at the service level.
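The flag only affects tasks launched after it is turned on, so you can combine enabling it and rolling the service into one step. This is a minimal sketch, assuming the caller is allowed to run `update-service`. `--force-new-deployment` replaces the running tasks, and the `services-stable` waiter blocks until the new tasks have settled. The function name is hypothetical.

```bash
# enable_ecs_exec CLUSTER SERVICE REGION
# Hypothetical helper: turns on ECS Exec and replaces the running tasks,
# since only tasks started after the change get ECS Exec.
enable_ecs_exec() {
  aws ecs update-service \
    --cluster "$1" \
    --service "$2" \
    --enable-execute-command \
    --force-new-deployment \
    --region "$3" >/dev/null || return 1
  # Wait for the new deployment; the waiter polls a fixed number of times
  # and returns non-zero if the service never stabilizes.
  aws ecs wait services-stable \
    --cluster "$1" \
    --services "$2" \
    --region "$3"
}

# Example:
# enable_ecs_exec my-cluster my-service us-east-1
```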

Alternative: Check in the AWS Console (UI)

- Open the Amazon ECS Console.

- Go to Clusters and select your cluster.

- Click on the Services tab and select your service.

- Under Networking, look for the ECS Exec status.

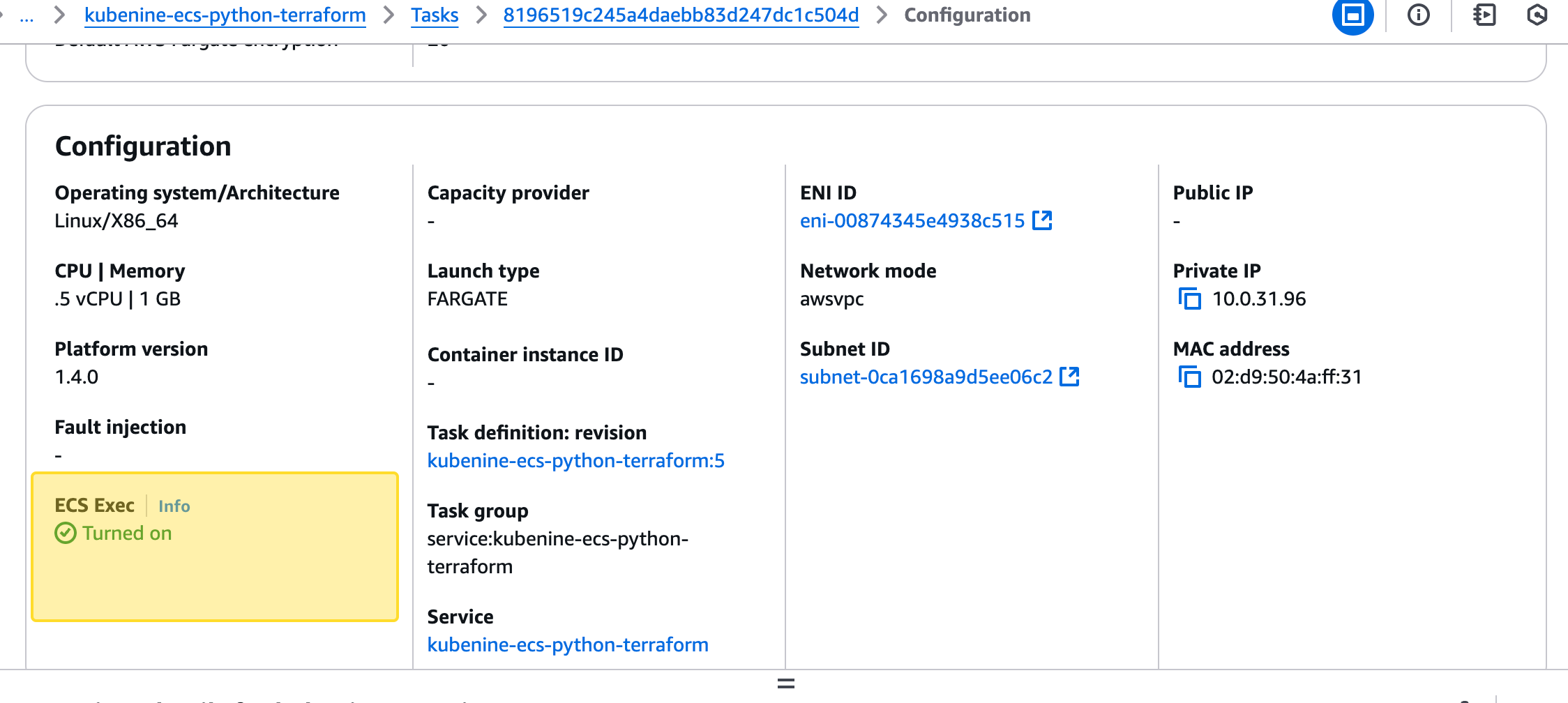

Step 3: Verify ECS Exec at the Task Level

Now, check if ECS Exec is enabled at the task level:

- Open the Amazon ECS Console.

- Go to Clusters and select your cluster.

- Click on the Tasks tab and choose a running task.

- Look for the Execute Command option to confirm ECS Exec is enabled.

Alternatively, use the AWS CLI:

```bash
aws ecs describe-tasks \
  --cluster <your-cluster> \
  --tasks <your-task-id> \
  --query 'tasks[].enableExecuteCommand' \
  --region <your-region>
```

Step 4: Verify if the ECS Exec Agent is Running

To confirm that the ECS Exec agent is running inside the task, run:

```bash
aws ecs describe-tasks \
  --cluster <your-cluster> \
  --tasks <your-task-id> \
  --query 'tasks[].containers[].managedAgents[]' \
  --region <your-region>
```

If the agent is running, you will see output like the following (other fields trimmed):

```json
[
  {
    "name": "ExecuteCommandAgent",
    "lastStatus": "RUNNING"
  }
]
```
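The agent may not report RUNNING the instant a task starts, so in scripts it helps to poll instead of checking once. This sketch is a helper of our own, not an AWS tool. `exec_agent_status` and `wait_for_exec_agent` are hypothetical names. The JMESPath query picks the `ExecuteCommandAgent` entry of one named container, and the attempt count and delay are arbitrary defaults.

```bash
# exec_agent_status CLUSTER TASK CONTAINER REGION
# Hypothetical helper: prints the lastStatus of the ExecuteCommandAgent
# in one container of the task.
exec_agent_status() {
  aws ecs describe-tasks \
    --cluster "$1" \
    --tasks "$2" \
    --region "$4" \
    --query "tasks[0].containers[?name=='$3'].managedAgents[] | [?name=='ExecuteCommandAgent'].lastStatus | [0]" \
    --output text
}

# wait_for_exec_agent CLUSTER TASK CONTAINER REGION [ATTEMPTS] [DELAY_SECONDS]
# Polls until the agent reports RUNNING (exit 0), gives up after ATTEMPTS
# polls (exit 1), or stops early if the AWS CLI call fails (exit 2).
wait_for_exec_agent() {
  local attempts="${5:-20}" delay="${6:-5}" status i=1
  while [ "$i" -le "$attempts" ]; do
    status="$(exec_agent_status "$1" "$2" "$3" "$4")" || return 2
    if [ "$status" = "RUNNING" ]; then
      echo "ExecuteCommandAgent is RUNNING"
      return 0
    fi
    echo "attempt $i/$attempts: agent status is '$status'" >&2
    i=$((i + 1))
    sleep "$delay"
  done
  return 1
}
```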

If it is not running, restart the ECS task to apply changes:

aws ecs update-service \

--cluster <your-cluster> \

--service <your-service> \

--desired-count 1 \

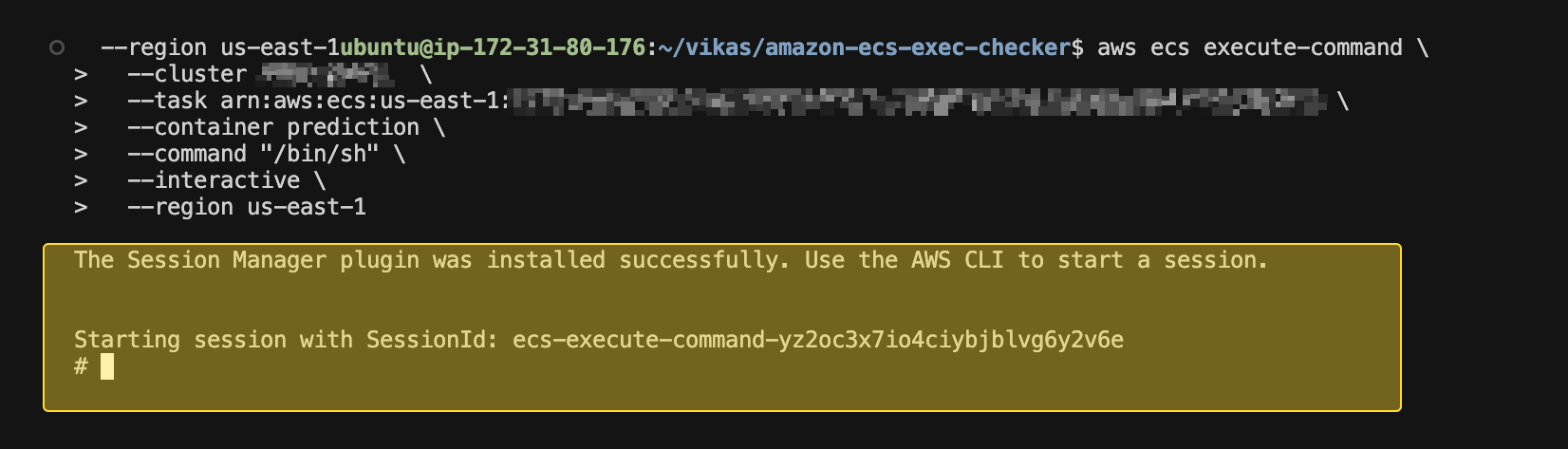

--region <your-region>Step 5: Access the Container Using ECS Exec

Once ECS Exec is enabled and the agent is running, you can connect to the container using:

```bash
aws ecs execute-command \
  --cluster <your-cluster> \
  --task <your-task-id> \
  --container <your-container-name> \
  --command "/bin/sh" \
  --interactive \
  --region <your-region>
```

If everything is set up correctly, you should now have access to the container.
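Looking up a task ID by hand gets tedious when tasks are replaced often. One option is to pick the first running task of the service automatically. This is a sketch under our own assumptions: `ecs_shell` and `first_running_task` are hypothetical helpers, and any running task of the service is treated as interchangeable.

```bash
# first_running_task CLUSTER SERVICE REGION
# Hypothetical helper: prints the ARN of one running task in the service
# ("None" if there is none).
first_running_task() {
  aws ecs list-tasks \
    --cluster "$1" \
    --service-name "$2" \
    --desired-status RUNNING \
    --region "$3" \
    --query 'taskArns[0]' \
    --output text
}

# ecs_shell CLUSTER SERVICE CONTAINER REGION [COMMAND]
# Hypothetical helper: opens an interactive ECS Exec session in the first
# running task (default command /bin/sh).
ecs_shell() {
  local task
  task="$(first_running_task "$1" "$2" "$4")" || return 1
  if [ -z "$task" ] || [ "$task" = "None" ]; then
    echo "no running task found for service $2" >&2
    return 1
  fi
  aws ecs execute-command \
    --cluster "$1" \
    --task "$task" \
    --container "$3" \
    --command "${5:-/bin/sh}" \
    --interactive \
    --region "$4"
}

# Example:
# ecs_shell my-cluster my-service my-container us-east-1
```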

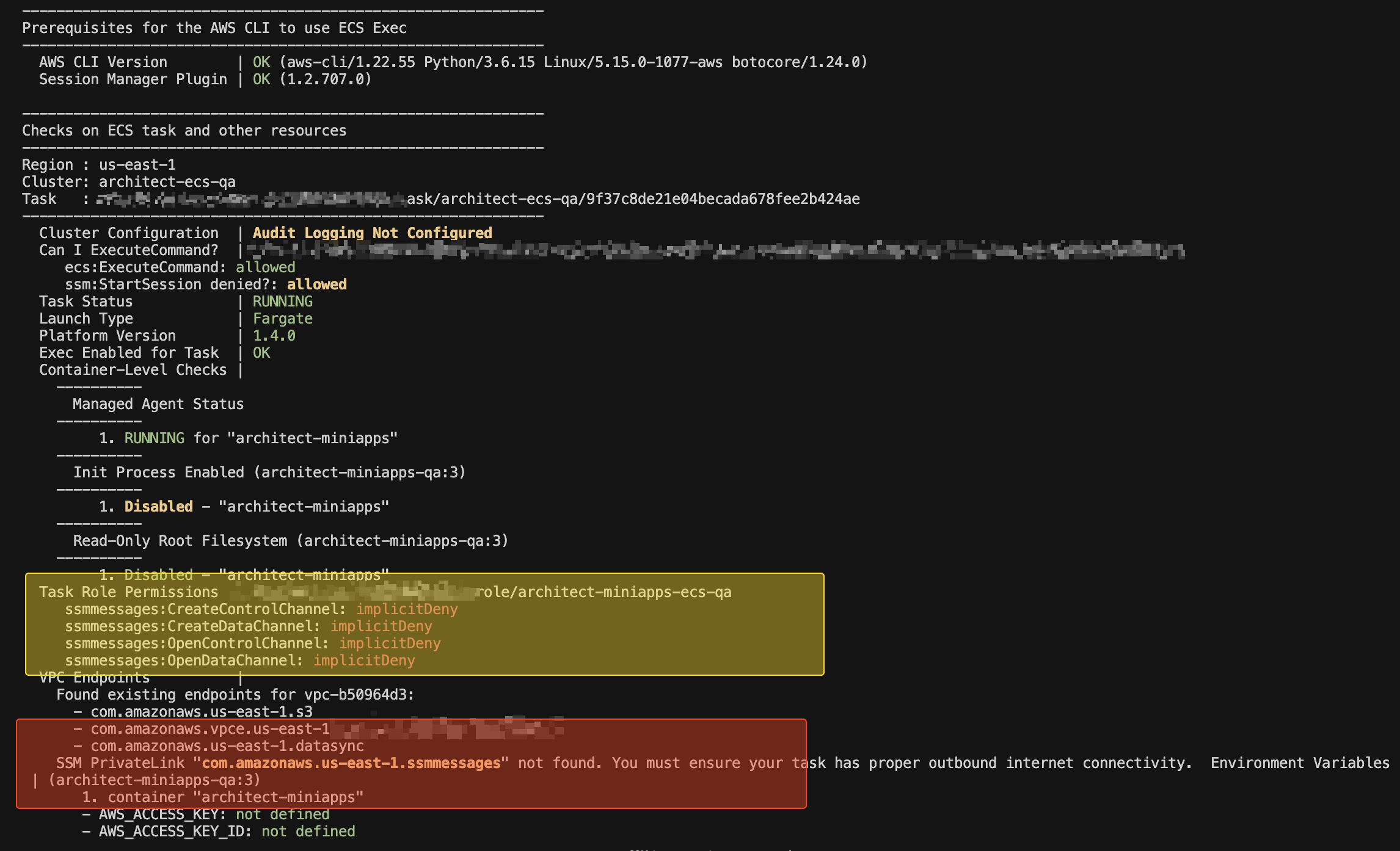

If ECS Exec is still not working, use the Amazon ECS Exec Checker tool to diagnose the issue.

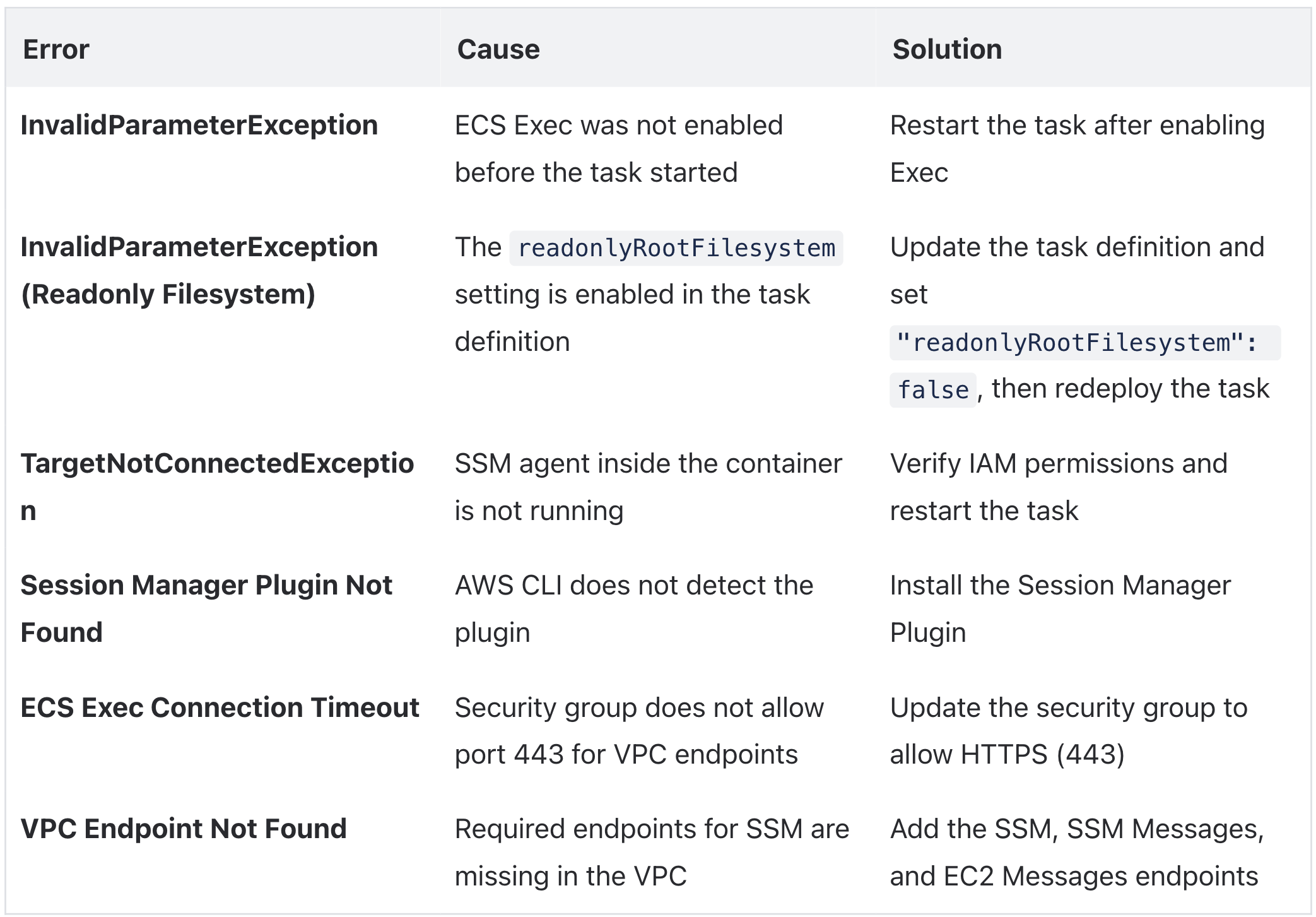

Step 6: Troubleshooting ECS Exec Issues

Clone the ECS Exec Checker Repository

```bash
git clone https://github.com/aws-containers/amazon-ecs-exec-checker.git
cd amazon-ecs-exec-checker
```

Run the ECS Exec Checker Script

```bash
chmod +x check-ecs-exec.sh
./check-ecs-exec.sh <your-cluster> <your-task-arn>
```

Example:

```bash
./check-ecs-exec.sh architect-ecs-qa arn:aws:ecs:us-east-1:98700009786565:task/architect-ecs-qa/9f309986767jhhjghcgcjgjhb424ae
```

This script checks IAM permissions, ECS service configurations, and required dependencies. If it finds an issue, the output indicates what needs to be fixed.

In our case, the checker output in the screenshot shows that missing permissions are causing the issue.

The ECS task role (architect-miniapps-ecs-qa) does not have the required permissions for ssmmessages, specifically:

- ssmmessages:CreateControlChannel
- ssmmessages:CreateDataChannel
- ssmmessages:OpenControlChannel
- ssmmessages:OpenDataChannel

These permissions are currently implicitly denied, which means the ECS task is unable to establish a session using AWS Systems Manager (SSM).
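You can confirm the same diagnosis from the CLI with the IAM policy simulator. This is a sketch, assuming you know the task role's ARN and are allowed to call `iam:SimulatePrincipalPolicy`. `check_task_role_ssmmessages` is a hypothetical helper. The simulator evaluates the role's policies, so treat its result as a strong hint rather than proof of what a live request will get.

```bash
# check_task_role_ssmmessages ROLE_ARN
# Hypothetical helper: prints each ssmmessages action with its simulated
# decision; exit 0 only if every action is "allowed".
check_task_role_ssmmessages() {
  local results
  results="$(aws iam simulate-principal-policy \
    --policy-source-arn "$1" \
    --action-names \
      ssmmessages:CreateControlChannel \
      ssmmessages:CreateDataChannel \
      ssmmessages:OpenControlChannel \
      ssmmessages:OpenDataChannel \
    --query 'EvaluationResults[].[EvalActionName,EvalDecision]' \
    --output text)" || return 2
  [ -n "$results" ] || return 2
  printf '%s\n' "$results"
  # Fail if any decision is something other than "allowed" (e.g. implicitDeny).
  ! printf '%s\n' "$results" | awk '{ print $2 }' | grep -qv '^allowed$'
}
```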

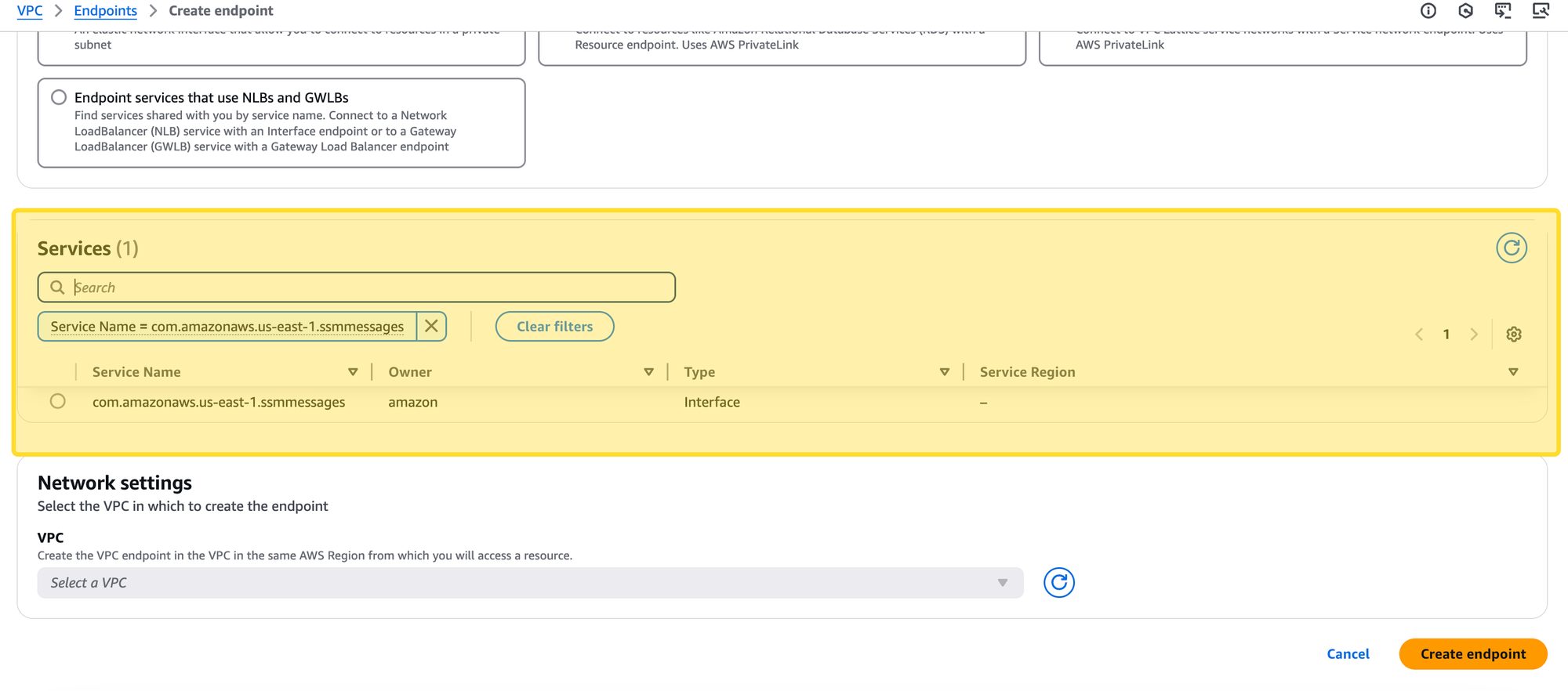

Additionally, since this task is running in a private subnet, it requires a VPC endpoint for SSM Messages (ssmmessages) to communicate with AWS services. However, as seen in the output, the required com.amazonaws.us-east-1.ssmmessages VPC endpoint is missing.
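To check for that endpoint without opening the console, you can filter the VPC's endpoints by service name. This is a minimal sketch, and the helper names are hypothetical. The service name is built as `com.amazonaws.<region>.ssmmessages`, which matches the endpoint named above for us-east-1.

```bash
# ssmmessages_endpoint_ids VPC_ID REGION
# Hypothetical helper: prints the IDs of ssmmessages endpoints in the VPC.
ssmmessages_endpoint_ids() {
  aws ec2 describe-vpc-endpoints \
    --region "$2" \
    --filters "Name=vpc-id,Values=$1" \
              "Name=service-name,Values=com.amazonaws.$2.ssmmessages" \
    --query 'VpcEndpoints[].VpcEndpointId' \
    --output text
}

# has_ssmmessages_endpoint VPC_ID REGION: exit 0 if at least one exists.
has_ssmmessages_endpoint() {
  local ids
  ids="$(ssmmessages_endpoint_ids "$1" "$2")" || return 2
  [ -n "$ids" ] && [ "$ids" != "None" ]
}
```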

To fix this:

- Attach the necessary permissions to the ECS task role.

- Add the missing VPC endpoint (com.amazonaws.us-east-1.ssmmessages) in the AWS VPC settings.
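You can also apply both fixes from the CLI. The sketch below assumes the task role, VPC, subnets, and endpoint security group already exist. The inline policy name `ecs-exec-ssmmessages` and both function names are hypothetical choices of ours.

```bash
# attach_ssmmessages_policy ROLE_NAME
# Hypothetical helper: adds an inline policy (name "ecs-exec-ssmmessages" is
# our own choice) with the four ssmmessages actions to the ECS task role.
attach_ssmmessages_policy() {
  local doc rc
  doc="$(mktemp)" || return 1
  cat > "$doc" <<'EOF'
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "ssmmessages:CreateControlChannel",
        "ssmmessages:CreateDataChannel",
        "ssmmessages:OpenControlChannel",
        "ssmmessages:OpenDataChannel"
      ],
      "Resource": "*"
    }
  ]
}
EOF
  aws iam put-role-policy \
    --role-name "$1" \
    --policy-name ecs-exec-ssmmessages \
    --policy-document "file://$doc"
  rc=$?
  rm -f "$doc"
  return "$rc"
}

# create_ssmmessages_endpoint VPC_ID REGION ENDPOINT_SG_ID SUBNET_ID...
# Hypothetical helper: creates an interface endpoint for ssmmessages with
# private DNS enabled, in the given private subnets.
create_ssmmessages_endpoint() {
  local vpc_id="$1" region="$2" sg_id="$3"
  shift 3
  aws ec2 create-vpc-endpoint \
    --region "$region" \
    --vpc-id "$vpc_id" \
    --vpc-endpoint-type Interface \
    --service-name "com.amazonaws.${region}.ssmmessages" \
    --security-group-ids "$sg_id" \
    --private-dns-enabled \
    --subnet-ids "$@"
}
```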

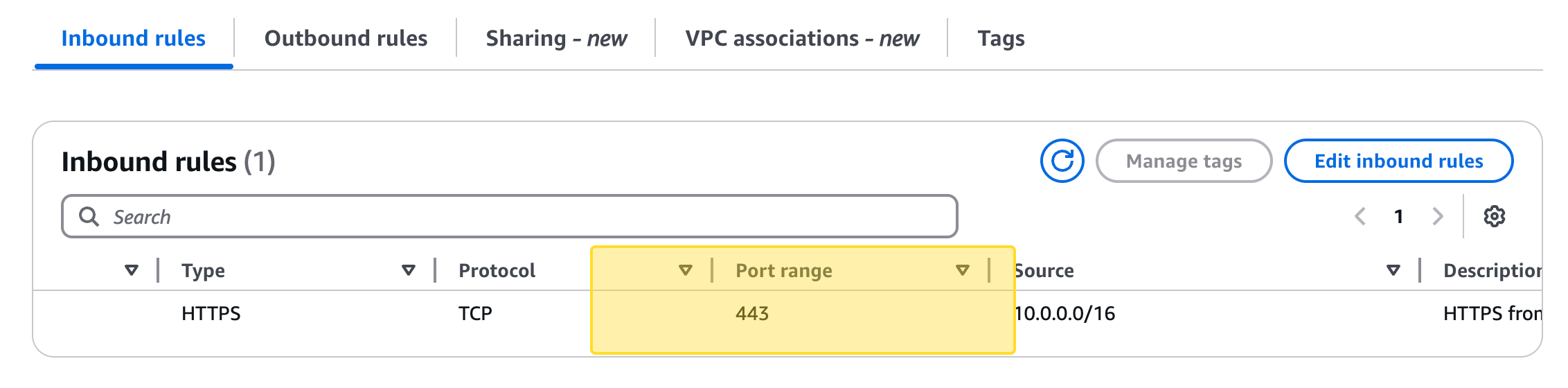

Step 7: Update Security Group Rules for VPC Endpoints

The security group attached to the VPC endpoints must allow HTTPS (port 443) inbound from the ECS tasks' security group and all outbound traffic to the ECS tasks' security group.
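The sketch below adds the inbound HTTPS rule, using the tasks' security group as the source. Both group IDs are placeholders you supply, and `allow_https_from_tasks` is a hypothetical helper. Security groups are stateful, so response traffic for connections allowed by this rule is permitted automatically.

```bash
# allow_https_from_tasks ENDPOINT_SG_ID TASK_SG_ID REGION
# Hypothetical helper: allows inbound TCP 443 on the VPC endpoint security
# group from the ECS tasks' security group.
allow_https_from_tasks() {
  aws ec2 authorize-security-group-ingress \
    --region "$3" \
    --group-id "$1" \
    --protocol tcp \
    --port 443 \
    --source-group "$2"
}

# Example:
# allow_https_from_tasks sg-0endpoint sg-0tasks us-east-1
```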

Step 8: Automate Everything with Terraform

If you want to skip all the manual steps and set everything up in one go, you can use our Terraform configuration. It includes all the necessary IAM permissions, VPC endpoints, and ECS Exec-enabled services.

Here is a demo Terraform configuration that runs a service in a private subnet and adds the required VPC endpoints automatically. With this setup, you can apply the same configuration to all your future services without repeating these steps. If you already have a VPC in place, the code should make it easy to see which changes you might need to make to your own infrastructure manually.

```hcl
provider "aws" {
  region = "us-east-1"
}

terraform {
  required_version = "~> 1.0"

  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0"
    }
  }
}

# ID of an existing VPC; leave empty to create a new VPC
variable "vpc_id" {
  description = "ID of an existing VPC to use. Leave empty to create a new VPC."
  type        = string
  default     = ""
}

data "aws_availability_zones" "available" {}

locals {
  region         = "us-east-1"
  name           = "kubenine-${basename(path.cwd)}"
  vpc_cidr       = "10.0.0.0/16"
  azs            = slice(data.aws_availability_zones.available.names, 0, 3)
  container_name = "kubenine-container"
  container_port = 80
  vpc_cidr_block = var.vpc_id != "" ? data.aws_vpc.vpc.cidr_block : module.vpc[0].vpc_cidr_block

  tags = {
    Repository = "https://github.com/terraform-aws-modules/terraform-aws-ecs"
  }
}

# Create a VPC with public and private subnets
# Private subnets do not have any internet access since NAT gateway is disabled
module "vpc" {
  count   = var.vpc_id == "" ? 1 : 0
  source  = "terraform-aws-modules/vpc/aws"
  version = "~> 5.0"

  name = local.name
  cidr = local.vpc_cidr
  azs  = local.azs

  private_subnets = [for k, v in local.azs : cidrsubnet(local.vpc_cidr, 4, k)]
  public_subnets  = [for k, v in local.azs : cidrsubnet(local.vpc_cidr, 8, k + 48)]

  public_subnet_tags       = { "Tier" = "Public" }
  private_subnet_tags      = { "Tier" = "Private" }
  private_route_table_tags = { "Tier" = "Private-route" }
  public_route_table_tags  = { "Tier" = "Public-route" }

  enable_nat_gateway   = false
  single_nat_gateway   = false
  enable_dns_hostnames = true

  tags = local.tags
}

data "aws_vpc" "vpc" {
  # If the VPC ID is not provided, a new VPC will be created
  id = var.vpc_id != "" ? var.vpc_id : module.vpc[0].vpc_id
}

# Get private subnets in the VPC
data "aws_subnets" "private" {
  # Add a filter to get only private subnets
  filter {
    name   = "tag:Tier"
    values = ["Private"]
  }

  # Add a filter to get only subnets in the VPC
  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.vpc.id]
  }

  depends_on = [module.vpc]
}

data "aws_subnets" "public" {
  filter {
    name   = "tag:Tier"
    values = ["Public"]
  }

  filter {
    name   = "vpc-id"
    values = [data.aws_vpc.vpc.id]
  }
}

data "aws_route_tables" "private" {
  vpc_id = data.aws_vpc.vpc.id

  filter {
    name   = "tag:Tier"
    values = ["Private-route"]
  }

  depends_on = [module.vpc]
}

output "private_route_tables" {
  value = data.aws_route_tables.private
}

module "ecs" {
  source  = "terraform-aws-modules/ecs/aws"
  version = "~> 5.0" # the inputs below follow the v5 module interface

  cluster_name = "kubeops-ecs"

  cluster_configuration = {
    execute_command_configuration = {
      logging = "OVERRIDE"
      log_configuration = {
        cloud_watch_log_group_name = "/aws/ecs/aws-ec2"
      }
    }
  }

  fargate_capacity_providers = {
    FARGATE = {
      # The default_capacity_provider_strategy is used to determine how tasks are placed
      default_capacity_provider_strategy = {
        weight = 50
      }
    }
    # The FARGATE_SPOT capacity provider runs tasks on Fargate Spot capacity
    FARGATE_SPOT = {
      default_capacity_provider_strategy = {
        weight = 50
      }
    }
  }
}

module "ecs_service" {
  source  = "terraform-aws-modules/ecs/aws//modules/service"
  version = "~> 5.0"

  name        = local.name
  cluster_arn = module.ecs.cluster_arn

  cpu    = 512
  memory = 1024

  # Enables ECS Exec for tasks started by this service
  enable_execute_command = true
  desired_count          = 1

  container_definitions = {
    (local.container_name) = {
      cpu       = 512
      memory    = 1024
      essential = true
      # ECS Exec needs a writable root file system
      readonly_root_filesystem = false
      # Replace with your own image
      image = "905418054480.dkr.ecr.us-east-1.amazonaws.com/dummy-log-generator"
      port_mappings = [
        {
          name          = local.container_name
          containerPort = local.container_port
          hostPort      = local.container_port
          protocol      = "tcp"
        }
      ]
    }
  }

  task_exec_iam_statements = [
    {
      actions = [
        "ssmmessages:CreateControlChannel",
        "ssmmessages:CreateDataChannel",
        "ssmmessages:OpenControlChannel",
        "ssmmessages:OpenDataChannel",
        "ecs:ExecuteCommand",
        "ssm:StartSession",
        "ssm:GetConnectionStatus",
        "ssm:DescribeSessions",
        "logs:DescribeLogGroups",
        "logs:CreateLogStream",
        "logs:DescribeLogStreams",
        "logs:PutLogEvents"
      ]
      resources = ["*"]
    }
  ]

  # Task role: the ssmmessages actions here are what the ECS Exec agent needs
  tasks_iam_role_statements = [
    {
      actions = [
        "ssmmessages:CreateControlChannel",
        "ssmmessages:CreateDataChannel",
        "ssmmessages:OpenControlChannel",
        "ssmmessages:OpenDataChannel",
        "ecs:ExecuteCommand",
        "ssm:StartSession",
        "ssm:GetConnectionStatus",
        "ssm:DescribeSessions",
        "logs:DescribeLogGroups",
        "logs:CreateLogStream",
        "logs:DescribeLogStreams",
        "logs:PutLogEvents"
      ]
      resources = ["*"]
    }
  ]

  subnet_ids = data.aws_subnets.private.ids

  # Allow outbound traffic so the tasks can reach the VPC endpoints
  security_group_rules = {
    egress_all = {
      type        = "egress"
      from_port   = 0
      to_port     = 0
      protocol    = "-1"
      cidr_blocks = ["0.0.0.0/0"]
    }
  }

  service_tags = {
    "ServiceTag" = "Tag on service level"
  }

  tags = local.tags
}

# VPC endpoints are needed because the private subnets don't have internet connectivity
module "vpc_endpoints" {
  source  = "terraform-aws-modules/vpc/aws//modules/vpc-endpoints"
  version = "~> 5.0"

  vpc_id = data.aws_vpc.vpc.id

  create_security_group      = true
  security_group_name_prefix = "${local.name}-vpc-endpoints-"
  security_group_description = "VPC endpoint security group"
  security_group_rules = {
    # Without an explicit type and ports, a rule is treated as inbound HTTPS (443)
    ingress_https = {
      description = "HTTPS from VPC"
      cidr_blocks = [data.aws_vpc.vpc.cidr_block]
    }
    egress_all = {
      description = "All traffic to the VPC"
      type        = "egress"
      from_port   = 0
      to_port     = 0
      protocol    = "-1"
      cidr_blocks = [data.aws_vpc.vpc.cidr_block]
    }
  }

  endpoints = {
    s3 = {
      service         = "s3"
      service_type    = "Gateway"
      route_table_ids = data.aws_route_tables.private.ids
      policy          = data.aws_iam_policy_document.generic_endpoint_policy.json
      tags            = { Name = "s3-api-vpc-endpoint" }
    },
    ecs_telemetry = {
      service             = "ecs-telemetry"
      private_dns_enabled = true
      subnet_ids          = data.aws_subnets.private.ids
      policy              = data.aws_iam_policy_document.generic_endpoint_policy.json
      tags                = { Name = "ecs-telemetry-vpc-endpoint" }
    },
    ecr_api = {
      service             = "ecr.api"
      private_dns_enabled = true
      subnet_ids          = data.aws_subnets.private.ids
      policy              = data.aws_iam_policy_document.generic_endpoint_policy.json
      tags                = { Name = "ecr-api-vpc-endpoint" }
    },
    ecr_dkr = {
      service             = "ecr.dkr"
      private_dns_enabled = true
      subnet_ids          = data.aws_subnets.private.ids
      policy              = data.aws_iam_policy_document.generic_endpoint_policy.json
      tags                = { Name = "ecr-dkr-vpc-endpoint" }
    },
    logs = {
      service             = "logs"
      private_dns_enabled = true
      subnet_ids          = data.aws_subnets.private.ids
      policy              = data.aws_iam_policy_document.generic_endpoint_policy.json
      tags                = { Name = "logs-vpc-endpoint" }
    },
    ssmmessages = {
      service             = "ssmmessages"
      private_dns_enabled = true
      subnet_ids          = data.aws_subnets.private.ids
      policy              = data.aws_iam_policy_document.generic_endpoint_policy.json
      tags                = { Name = "ssmmessages-vpc-endpoint" }
    }
  }

  tags = merge(local.tags, {
    Project  = "Secret"
    Endpoint = "true"
  })
}

data "aws_iam_policy_document" "generic_endpoint_policy" {
  statement {
    effect    = "Allow"
    actions   = ["*"]
    resources = ["*"]

    principals {
      type        = "*"
      identifiers = ["*"]
    }
  }
}
```

Common Issues and Fixes


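If ECS Exec is still not working and fails with this error:

An error occurred (InvalidParameterException) when calling the ExecuteCommand operation: The execute command failed because execute command was not enabled when the task was run or the execute command agent isn’t running.

First make sure the task was started after ECS Exec was enabled on the service. If it was, check your ECS task definition. The SSM agent that ECS Exec runs inside the container needs to write to the container's file system, so ECS Exec does not work when readonlyRootFilesystem is true. A plain ECS task definition defaults this to false. The terraform-aws-modules container definition used in the Terraform example above defaults readonly_root_filesystem to true, which is why that example sets it to false explicitly.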

To fix this, update your task definition and change "readonlyRootFilesystem": true to "readonlyRootFilesystem": false.

Then redeploy the task and retry ECS Exec.
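To find which containers in a task definition have a read-only root file system before redeploying, you can filter the container definitions. This is a sketch, and the helper names are hypothetical. `--task-definition` accepts a family, `family:revision`, or a full ARN.

```bash
# readonly_root_containers TASK_DEFINITION REGION
# Hypothetical helper: prints the names of containers whose
# readonlyRootFilesystem is true in the given task definition.
readonly_root_containers() {
  aws ecs describe-task-definition \
    --task-definition "$1" \
    --region "$2" \
    --query 'taskDefinition.containerDefinitions[?readonlyRootFilesystem==`true`].name' \
    --output text
}

# has_readonly_root TASK_DEFINITION REGION: exit 0 (and report) if any
# container has a read-only root file system, 1 if none does.
has_readonly_root() {
  local names
  names="$(readonly_root_containers "$1" "$2")" || return 2
  if [ -n "$names" ] && [ "$names" != "None" ]; then
    echo "readonlyRootFilesystem is true for: $names" >&2
    return 0
  fi
  return 1
}
```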

Conclusion

By setting up the necessary IAM permissions, VPC endpoints, and security group rules, ECS Exec can work without issues.

If you run into any errors, check the Common Issues and Fixes section for quick solutions. With this setup, you can easily access and debug your ECS containers!