Introduction

Most deployment workflows rely on SSH access to production VMs. That access creates security risks and adds operational complexity: you have to manage SSH keys across environments, and every reachable SSH port is one more way into your infrastructure.

Modern DevOps calls for secure, automated deployment pipelines that work without direct server access. A lightweight deployment controller gives you zero-touch deployments without giving up security or efficiency.

This guide shows how to build a secure deployment system using a Flask controller that manages Docker Compose restarts through a REST API. The solution works with your existing CI/CD pipeline while keeping your production environment secure.

Step-by-Step Implementation

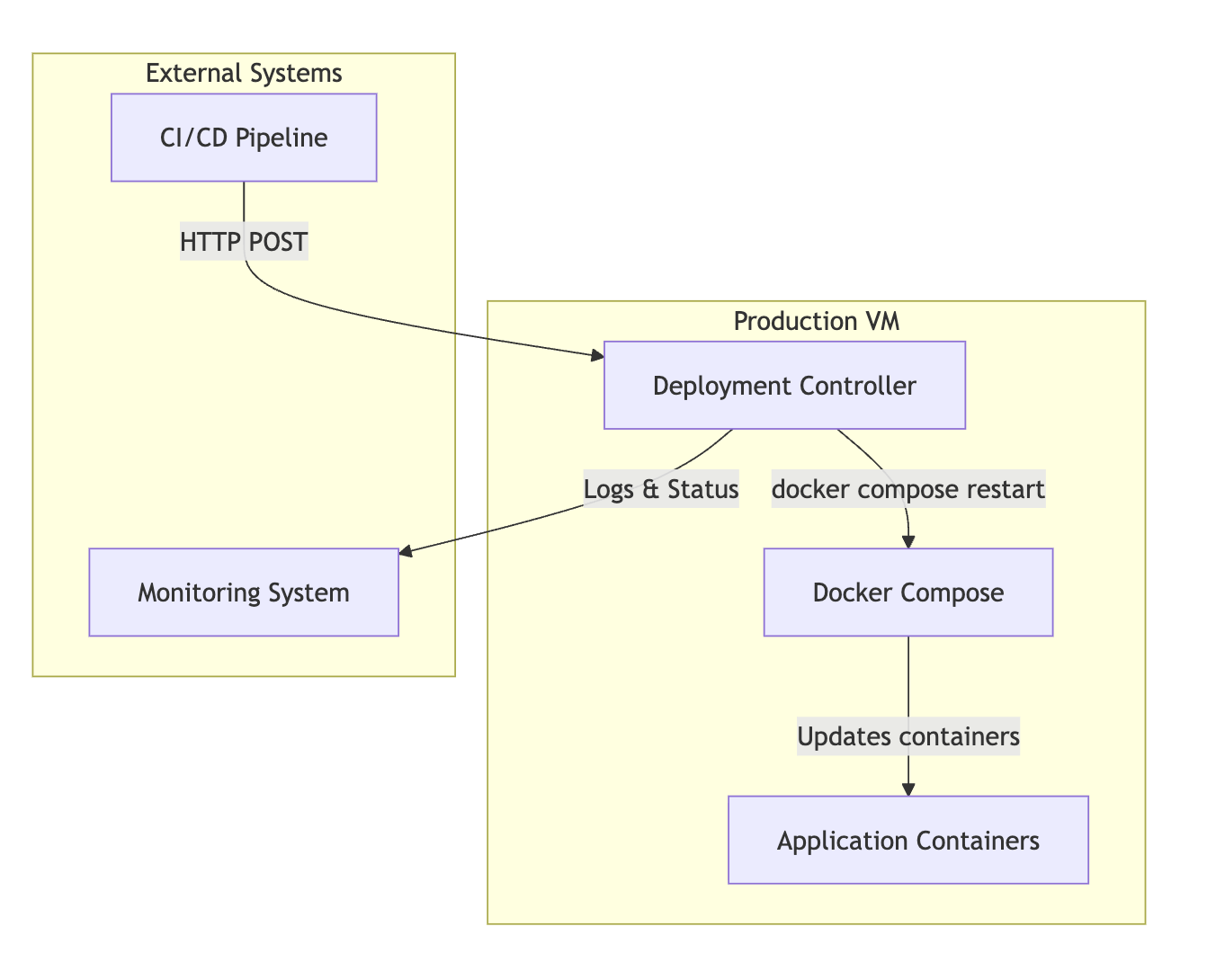

Architecture Overview

The solution consists of three main components:

- Deployment Controller: A lightweight Flask application that exposes REST endpoints

- Docker Compose: Manages your application containers

- CI/CD Pipeline: Triggers deployments through HTTP requests
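To make the third component concrete, here is a minimal sketch of the HTTP request a pipeline would send to the controller, built with Python's standard library. The function name `build_deploy_request` and the example host and token are illustrative assumptions; the `/deploy` path, the bearer token header, and the JSON fields follow the controller described in this guide.

```python
import json
import urllib.request


def build_deploy_request(base_url, token, service="", image_tag="latest"):
    """Build (but do not send) the POST request a pipeline would issue.

    build_deploy_request is a hypothetical helper for illustration only.
    """
    body = json.dumps({"service": service, "image_tag": image_tag}).encode("utf-8")
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/deploy",
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


if __name__ == "__main__":
    # Placeholder host and token; sending would be urllib.request.urlopen(req)
    req = build_deploy_request("https://deploy.example.com", "example-token", "web")
    print(req.get_method(), req.full_url)
```

The request is only constructed here, so the sketch can be run without a controller being reachable.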

1. Create the Deployment Controller

Create a new directory for your deployment controller:

    mkdir deployment-controller
    cd deployment-controller

Create the Flask application (app.py):

    from flask import Flask, request, jsonify
    import subprocess
    import logging
    import os
    from datetime import datetime

    app = Flask(__name__)

    # Configure logging
    logging.basicConfig(
        level=logging.INFO,
        format='%(asctime)s - %(levelname)s - %(message)s',
        handlers=[
            logging.FileHandler('deployment.log'),
            logging.StreamHandler()
        ]
    )

    # Configuration
    DOCKER_COMPOSE_FILE = os.getenv('DOCKER_COMPOSE_FILE', 'docker-compose.yml')
    DEPLOYMENT_TOKEN = os.getenv('DEPLOYMENT_TOKEN', 'your-secure-token-here')
    # Strip whitespace and drop empty entries so "a, b" and "" both parse cleanly
    ALLOWED_IPS = [ip.strip() for ip in os.getenv('ALLOWED_IPS', '').split(',') if ip.strip()]


    def validate_request():
        """Validate the incoming request"""
        # Check token
        token = request.headers.get('Authorization', '').replace('Bearer ', '')
        if token != DEPLOYMENT_TOKEN:
            return False, "Invalid token"

        # Check IP whitelist (optional)
        if ALLOWED_IPS and request.remote_addr not in ALLOWED_IPS:
            return False, "IP not allowed"

        return True, "Valid request"


    def run_docker_compose_command(command):
        """Execute docker compose command safely"""
        try:
            result = subprocess.run(
                ['docker', 'compose', '-f', DOCKER_COMPOSE_FILE] + command.split(),
                capture_output=True,
                text=True,
                check=True,
                cwd=os.path.dirname(os.path.abspath(DOCKER_COMPOSE_FILE))
            )
            return True, result.stdout
        except subprocess.CalledProcessError as e:
            return False, e.stderr
        except FileNotFoundError:
            # Raised when the docker binary or the working directory is missing
            return False, "docker CLI or working directory not found"


    @app.route('/health', methods=['GET'])
    def health_check():
        """Health check endpoint"""
        return jsonify({
            'status': 'healthy',
            'timestamp': datetime.utcnow().isoformat(),
            'service': 'deployment-controller'
        })


    @app.route('/deploy', methods=['POST'])
    def deploy():
        """Deploy new images by recreating containers"""
        # Validate request
        is_valid, message = validate_request()
        if not is_valid:
            logging.warning(f"Invalid deployment request: {message}")
            return jsonify({'error': message}), 401

        # Get deployment details (silent=True returns None for a missing or
        # non-JSON body instead of raising, so the fallback to {} applies)
        data = request.get_json(silent=True) or {}
        service_name = data.get('service', '')
        # image_tag is only logged and echoed back; the image that actually
        # runs is the one named in the Compose file
        image_tag = data.get('image_tag', 'latest')

        logging.info(f"Starting deployment for service: {service_name}, image: {image_tag}")

        # Note: If using pull_policy: always, skip the pull step.
        # Compose pulls the image itself when `up` (re)creates the container.
        # Uncomment the following lines only if you need explicit pull control:
        # success, output = run_docker_compose_command('pull')
        # if not success:
        #     logging.error(f"Failed to pull images: {output}")
        #     return jsonify({'error': 'Failed to pull images', 'details': output}), 500

        # Recreate a specific service or all services. `up -d` is used rather
        # than `restart`, because `restart` keeps the existing container and
        # therefore the old image.
        if service_name:
            up_command = f'up -d {service_name}'
        else:
            up_command = 'up -d'

        success, output = run_docker_compose_command(up_command)
        if not success:
            logging.error(f"Failed to recreate containers: {output}")
            return jsonify({'error': 'Failed to recreate containers', 'details': output}), 500

        logging.info(f"Deployment completed successfully for service: {service_name}")
        return jsonify({
            'status': 'success',
            'message': 'Deployment completed',
            'service': service_name,
            'image_tag': image_tag,
            'timestamp': datetime.utcnow().isoformat(),
            'output': output
        })


    @app.route('/status', methods=['GET'])
    def status():
        """Get current deployment status"""
        is_valid, message = validate_request()
        if not is_valid:
            return jsonify({'error': message}), 401

        success, output = run_docker_compose_command('ps')
        if not success:
            return jsonify({'error': 'Failed to get status', 'details': output}), 500

        return jsonify({
            'status': 'success',
            'containers': output,
            'timestamp': datetime.utcnow().isoformat()
        })


    if __name__ == '__main__':
        app.run(host='0.0.0.0', port=5000, debug=False)

2. Create Requirements and Configuration

Create requirements.txt:

    Flask==2.3.3
    gunicorn==21.2.0

Create Dockerfile for the controller:

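    FROM python:3.11-slim

    WORKDIR /app

    # Note: app.py shells out to `docker compose`, which python:3.11-slim does
    # not ship. Install the Docker CLI and its Compose plugin in this image
    # before relying on the controller.

    COPY requirements.txt .
    RUN pip install --no-cache-dir -r requirements.txt

    COPY app.py .

    EXPOSE 5000

    CMD ["gunicorn", "--bind", "0.0.0.0:5000", "app:app"]

Create docker-compose.yml for the controller: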

    version: '3.8'

    services:
      deployment-controller:
        build: .
        ports:
          - "5000:5000"
        environment:
          - DOCKER_COMPOSE_FILE=/app/docker-compose.yml
          - DEPLOYMENT_TOKEN=${DEPLOYMENT_TOKEN}
          - ALLOWED_IPS=${ALLOWED_IPS}
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          - ./docker-compose.yml:/app/docker-compose.yml
        restart: unless-stopped
        networks:
          - deployment-network

    networks:
      deployment-network:
        driver: bridge

3. Set Up Environment Variables

Create .env file:

    # Deployment Controller Configuration
    DEPLOYMENT_TOKEN=your-super-secure-token-here-change-this
    # Optional: restrict to specific IPs
    ALLOWED_IPS=192.168.1.100,10.0.0.50

    # Your Application Configuration
    DOCKER_COMPOSE_FILE=docker-compose.yml

4. Deploy the Controller
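Before starting the controller, the placeholder DEPLOYMENT_TOKEN in .env should be replaced with a random value. One way to produce it is Python's standard `secrets` module; the helper name `generate_deployment_token` and the 32-byte length are assumptions made for this sketch, not requirements of the controller.

```python
import secrets


def generate_deployment_token(num_bytes=32):
    """Return a URL-safe random token suitable for DEPLOYMENT_TOKEN."""
    return secrets.token_urlsafe(num_bytes)


if __name__ == "__main__":
    # Paste the printed line into .env in place of the placeholder value
    print(f"DEPLOYMENT_TOKEN={generate_deployment_token()}")
```

The same value then goes into the CI/CD system's secret store so the pipeline can authenticate.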

    # Start the deployment controller
    docker compose up -d

    # Check if it's running
    curl http://localhost:5000/health

5. Update Your Application's Docker Compose

Modify your main application's docker-compose.yml to include the deployment controller:

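    version: '3.8'

    services:
      # Your existing services
      web:
        image: your-registry/your-app:latest
        ports:
          - "80:80"
        environment:
          - NODE_ENV=production
        restart: unless-stopped

      api:
        image: your-registry/your-api:latest
        ports:
          - "3000:3000"
        environment:
          - DATABASE_URL=postgresql://...
        restart: unless-stopped

      # Deployment Controller
      # Note: Compose derives the default project name from the Compose file's
      # directory (/app inside this container). Set COMPOSE_PROJECT_NAME or a
      # top-level `name:` so the controller addresses the same project as the host.
      deployment-controller:
        build: ./deployment-controller
        ports:
          - "5000:5000"
        environment:
          - DOCKER_COMPOSE_FILE=/app/docker-compose.yml
          - DEPLOYMENT_TOKEN=${DEPLOYMENT_TOKEN}
        volumes:
          - /var/run/docker.sock:/var/run/docker.sock
          # Mount only the Compose file; mounting the whole project (.:/app)
          # would hide the app.py that the image copies into /app
          - ./docker-compose.yml:/app/docker-compose.yml
        restart: unless-stopped

6. Image Pull Strategy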

The deployment approach depends on your image pull policy:

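Option A: Using pull_policy: always (Recommended)

    # In your docker-compose.yml
    services:
      web:
        image: your-registry/your-app:latest
        pull_policy: always  # Compose pulls the image each time it creates the container
        restart: unless-stopped

With this setup, Compose pulls the latest image whenever it creates or recreates the container with docker compose up, so no explicit docker compose pull is needed. Note that the Compose key is pull_policy (imagePullPolicy is the Kubernetes field), and that a plain docker compose restart reuses the existing container and keeps running the old image.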

Option B: Explicit Pull Control

    # In your docker-compose.yml
    services:
      web:
        image: your-registry/your-app:latest
        pull_policy: missing  # Only pull if image doesn't exist locally
        restart: unless-stopped

With this setup, uncomment the pull lines in the Flask controller to ensure you get the latest images.
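The difference between the two options comes down to which Compose commands have to run for one deployment. The sketch below spells that out. `compose_steps` is a hypothetical helper, not part of the controller, and it assumes containers are refreshed with `docker compose up -d`, which recreates a container when its image has changed.

```python
def compose_steps(service="", explicit_pull=False):
    """Return the `docker compose` argument lists for one deployment.

    compose_steps is a hypothetical helper used only to illustrate the
    two pull strategies; it does not run anything.
    """
    target = [service] if service else []
    steps = []
    if explicit_pull:
        # Option B: fetch the image first because Compose will not do it
        steps.append(["pull"] + target)
    # Both options: recreate containers whose image or config changed
    steps.append(["up", "-d"] + target)
    return steps


if __name__ == "__main__":
    print(compose_steps("web"))                      # Option A
    print(compose_steps("web", explicit_pull=True))  # Option B
```

Returning argument lists rather than a single string keeps each argument separate when it is later handed to `subprocess.run`.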

7. Configure CI/CD Pipeline

GitHub Actions Example

Create .github/workflows/deploy.yml:

    name: Deploy to Production

    on:
      push:
        branches: [main]
      workflow_dispatch:

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Deploy to Production
            # --fail makes the step fail when the controller answers with an
            # HTTP error such as 401 or 500
            run: |
              curl --fail -X POST \
                -H "Authorization: Bearer ${{ secrets.DEPLOYMENT_TOKEN }}" \
                -H "Content-Type: application/json" \
                -d '{"service": "web", "image_tag": "latest"}' \
                https://your-production-vm.com:5000/deploy

GitLab CI Example

Create .gitlab-ci.yml:

    deploy:
      stage: deploy
      script:
        # --fail makes the job fail when the controller answers with an HTTP error
        - |
          curl --fail -X POST \
            -H "Authorization: Bearer $DEPLOYMENT_TOKEN" \
            -H "Content-Type: application/json" \
            -d '{"service": "web", "image_tag": "latest"}' \
            https://your-production-vm.com:5000/deploy
      only:
        - main

8. Security Considerations
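Request validation

Two small hardening steps apply to the request validation itself. The controller compares the bearer token with `!=` and builds the Compose command by splitting a string that contains the caller-supplied service name. A sketch of stricter checks follows; `token_matches` and `is_safe_service_name` are hypothetical helpers, and the allowed character set is an assumption that should be adjusted to your own service names.

```python
import hmac
import re

# Assumed pattern: letters, digits, '_', '.', '-'; must not start with '-'
_SERVICE_NAME = re.compile(r"[A-Za-z0-9][A-Za-z0-9_.-]*")


def token_matches(authorization_header, expected_token):
    """Compare a 'Bearer <token>' header to the expected token in constant time."""
    scheme, _, supplied = authorization_header.partition(" ")
    if scheme != "Bearer" or not expected_token:
        return False
    return hmac.compare_digest(supplied.encode("utf-8"), expected_token.encode("utf-8"))


def is_safe_service_name(name):
    """Accept an empty name (all services) or a single plain identifier."""
    return name == "" or _SERVICE_NAME.fullmatch(name) is not None
```

`hmac.compare_digest` avoids leaking how many leading characters matched through response timing, and rejecting names with spaces or a leading dash keeps a request from smuggling extra options into the `docker compose` call.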

Network Security

    # Use nginx as reverse proxy with SSL
    server {
        listen 443 ssl;
        server_name your-deployment-api.com;

        ssl_certificate /path/to/cert.pem;
        ssl_certificate_key /path/to/key.pem;

        location /deploy {
            proxy_pass http://localhost:5000;
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;

            # Additional security headers
            add_header X-Frame-Options DENY;
            add_header X-Content-Type-Options nosniff;
        }
    }

Firewall Configuration

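    # Allow only HTTPS traffic to deployment endpoint
    ufw allow 443/tcp

    # Block direct access to Flask app. Ports published by Docker bypass ufw
    # rules, so also publish the port as "127.0.0.1:5000:5000" in docker-compose.yml
    ufw deny 5000/tcp

9. Monitoring and Logging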

Add monitoring to your deployment controller:

    # Add to app.py (and add requests to requirements.txt)
    import requests

    def send_notification(message, status):
        """Send deployment notification to monitoring system"""
        webhook_url = os.getenv('WEBHOOK_URL')
        if webhook_url:
            payload = {
                'text': f'Deployment {status}: {message}',
                'timestamp': datetime.utcnow().isoformat()
            }
            try:
                requests.post(webhook_url, json=payload, timeout=5)
            except Exception as e:
                # A failed notification is logged but must not fail the deployment
                logging.error(f"Failed to send notification: {e}")

    # Update deploy endpoint
    @app.route('/deploy', methods=['POST'])
    def deploy():
        # ... existing code ...
        if success:
            send_notification(f"Service {service_name} deployed successfully", "SUCCESS")
        else:
            send_notification(f"Service {service_name} deployment failed", "FAILED")
        # ... rest of the code ...

Conclusion

This deployment approach eliminates SSH dependencies while maintaining security and operational efficiency. The Flask-based controller provides a simple, secure interface for your CI/CD pipeline to trigger deployments.

Key benefits of this solution:

- Better Security: No SSH keys or direct server access needed

- Simple Operations: One HTTP endpoint for all deployments

- Complete Logging: Track all deployment activities

- Easy to Scale: Add more deployment strategies when needed

- CI/CD Ready: Works with your existing pipeline

The solution works for single services or complex multi-service setups. You can add features like blue-green deployments, rollback options, and monitoring integration.

For production, add security measures like IP whitelisting, request rate limiting, and monitoring to ensure reliable deployments.
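As one example of the rate limiting mentioned above, a small in-memory sliding-window limiter is often enough for a single-process controller. This is a sketch under assumptions: the `SlidingWindowLimiter` class, its default limits, and keying by client IP are all illustrative, and state held in memory is not shared between multiple gunicorn workers.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per window_seconds for each key."""

    def __init__(self, max_requests=5, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested
        self._hits = defaultdict(deque)

    def allow(self, key):
        """Record a request for key and report whether it is within the limit."""
        now = self.clock()
        hits = self._hits[key]
        # Drop timestamps that have fallen out of the window
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False
        hits.append(now)
        return True
```

In the controller, `limiter.allow(request.remote_addr)` could be checked at the top of `deploy()` and answered with HTTP 429 when it returns False.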