Introduction

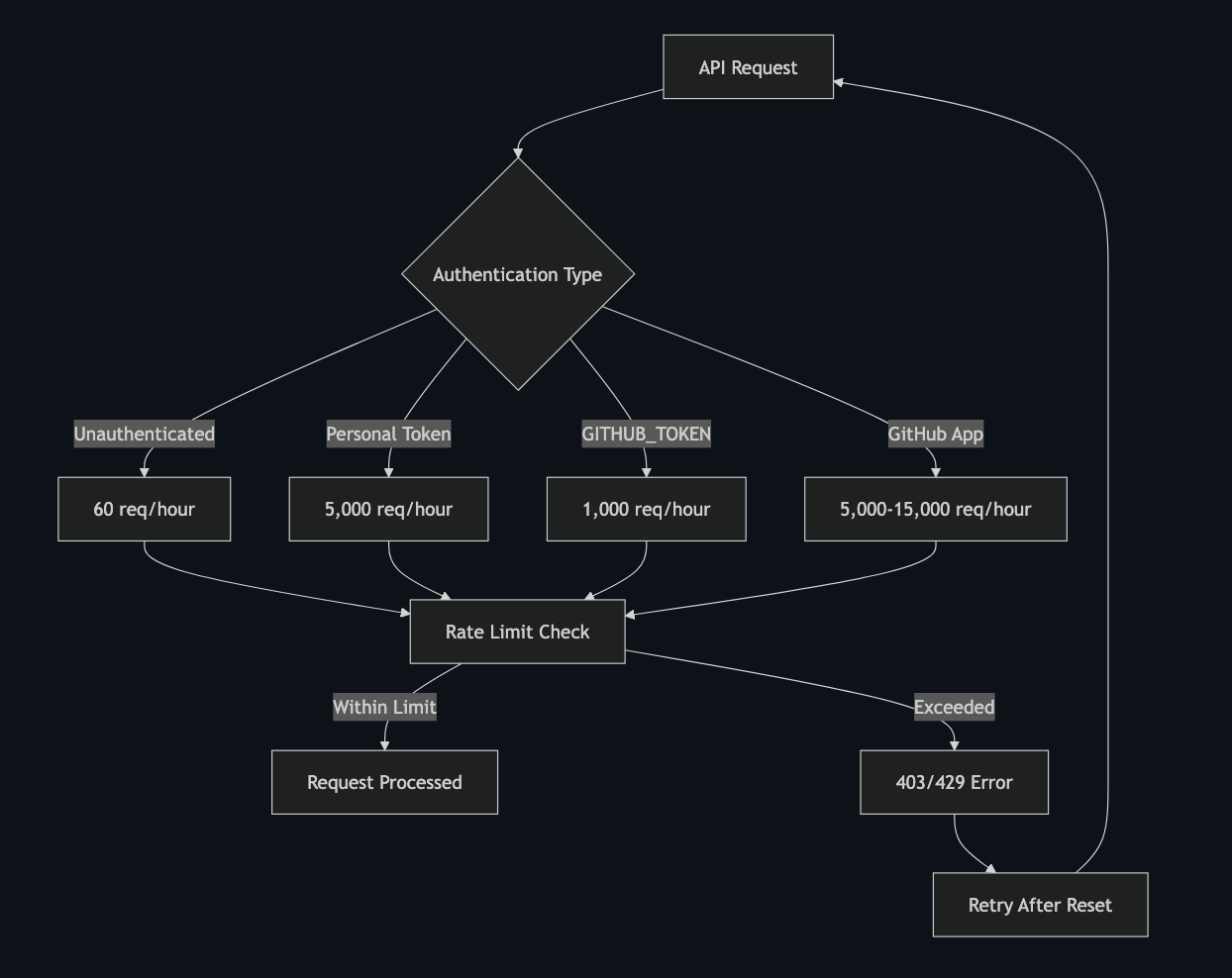

GitHub API rate limits can break your CI/CD pipelines and automation workflows. When your GitHub Actions workflows fail with 403 or 429 errors, a common cause is an exhausted rate limit. Understanding these limits and implementing proper strategies can save hours of debugging and prevent deployment failures.

GitHub enforces two types of rate limits: primary limits, which depend on how you authenticate, and secondary limits, which guard against abusive traffic patterns. The most common issue teams face is the limit of 1,000 requests per hour per repository for GITHUB_TOKEN in GitHub Actions, which active repositories with multiple workflows can easily exceed.

This guide covers practical strategies to avoid rate limiting, from token management to retry patterns, helping you build resilient automation that scales with your development velocity.

Understanding GitHub Rate Limits

GitHub uses different rate limits based on your authentication method:

- Unauthenticated requests: 60 requests/hour per IP

- Personal Access Tokens: 5,000 requests/hour per user (shared by all of that user's tokens)

- GitHub Actions GITHUB_TOKEN: 1,000 requests/hour per repository

- GitHub Apps: 5,000-12,500 requests/hour for installation tokens (scales with repos/users), or 15,000 on GitHub Enterprise Cloud
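
To see how quickly the per-repository GITHUB_TOKEN budget can run out, a rough calculation helps. The sketch below assumes the 1,000 requests/hour figure from the list above. The function name and the example run counts are illustrative and not part of any GitHub tooling.

```python
def calls_per_run(hourly_limit: int, runs_per_hour: int) -> int:
    """Return how many API calls each workflow run can make on average
    before the shared hourly budget is used up."""
    if runs_per_hour <= 0:
        raise ValueError("runs_per_hour must be positive")
    return hourly_limit // runs_per_hour


# Assumed figure: 1,000 requests/hour for GITHUB_TOKEN in one repository.
GITHUB_TOKEN_LIMIT = 1_000

for runs in (10, 50, 200):
    budget = calls_per_run(GITHUB_TOKEN_LIMIT, runs)
    print(f"{runs} runs/hour -> about {budget} calls per run")
```

At 200 workflow runs per hour, each run gets only about five API calls, which a single step that lists pull requests and posts a comment can use up.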

Secondary limits include:

- Maximum 100 concurrent requests

- 900 points per minute for REST API endpoints

- 80 content-generating requests per minute
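
The points-based limit is easier to reason about with a small client-side tracker. The sketch below assumes the point costs GitHub documents for its secondary limits (most read requests cost 1 point, most write requests cost 5). The `PointBudget` class and its method names are hypothetical helpers, not part of any GitHub library.

```python
import time
from collections import deque

# Assumed point costs: most reads cost 1 point, most writes cost 5.
POINT_COST = {"GET": 1, "HEAD": 1, "OPTIONS": 1,
              "POST": 5, "PATCH": 5, "PUT": 5, "DELETE": 5}


class PointBudget:
    """Track points spent in a sliding time window (illustrative helper)."""

    def __init__(self, max_points=900, window=60.0, clock=time.monotonic):
        self.max_points = max_points
        self.window = window
        self.clock = clock
        self._spent = deque()  # (timestamp, points) pairs, oldest first

    def _prune(self, now):
        # Drop entries that have aged out of the window.
        while self._spent and now - self._spent[0][0] >= self.window:
            self._spent.popleft()

    def used(self):
        """Return the points spent inside the current window."""
        self._prune(self.clock())
        return sum(points for _, points in self._spent)

    def try_spend(self, method):
        """Record the request and return True if it fits in the budget."""
        cost = POINT_COST[method.upper()]
        if self.used() + cost > self.max_points:
            return False
        self._spent.append((self.clock(), cost))
        return True
```

A caller would check `budget.try_spend("POST")` before each request and pause briefly when it returns `False`. This only approximates the server's accounting, so the response headers remain the source of truth.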

Token Management Strategies

Use GitHub Apps Instead of Personal Tokens

GitHub Apps provide higher rate limits and better security:

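    # .github/workflows/deploy.yml
    name: Deploy with GitHub App

    on:
      push:
        branches: [main]

    jobs:
      deploy:
        runs-on: ubuntu-latest
        steps:
          - name: Checkout
            uses: actions/checkout@v4
            with:
              # Assumes GITHUB_APP_TOKEN holds a valid installation access token.
              # These tokens expire after one hour, so in practice they are
              # usually generated earlier in the job rather than stored long term.
              token: ${{ secrets.GITHUB_APP_TOKEN }}

          - name: Deploy
            run: |
              # Your deployment logic here

Implement Token Rotation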

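Rotate tokens regularly to limit the impact of a leaked credential. Rotation on its own does not reset or raise the quota, because personal access tokens that belong to the same user share that user's 5,000 requests/hour. The script below stores a newly issued token as a repository secret: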

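    #!/bin/bash
    # token-rotation.sh
    # Usage: ./token-rotation.sh <old-token> <new-token>

    OLD_TOKEN=$1  # not used below; revoke it separately once the new token works
    NEW_TOKEN=$2

    # Update the secret in the current repository. Secret names cannot start
    # with GITHUB_, so DEPLOY_TOKEN is used here as an example name.
    gh secret set DEPLOY_TOKEN --body "$NEW_TOKEN"

Use Repository-Specific Tokens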

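Create separate tokens for different purposes so each one can be scoped and revoked independently. To actually distribute the load, the tokens need to come from different identities (for example, separate GitHub App installations), since tokens owned by one user draw on a single shared quota: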

    # Different tokens for different purposes
    env:
      DEPLOY_TOKEN: ${{ secrets.DEPLOY_TOKEN }}
      NOTIFY_TOKEN: ${{ secrets.NOTIFY_TOKEN }}
      BACKUP_TOKEN: ${{ secrets.BACKUP_TOKEN }}

Rate Limit Handling in Code

Implement Exponential Backoff
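
Exponential backoff doubles the wait after each failed attempt and adds a little random jitter so that parallel jobs do not retry in lockstep. As a minimal sketch of the schedule this produces, the helper below mirrors the `base_delay * (2 ** attempt)` expression used in the decorator in this section. The function name and the `jitter` and `rng` parameters are illustrative additions.

```python
import random


def backoff_delays(max_retries, base_delay, jitter=1.0, rng=random.uniform):
    """Return the sleep time before each retry: base_delay * 2**attempt + jitter."""
    # The final attempt is not followed by a sleep, hence max_retries - 1 delays.
    return [base_delay * (2 ** attempt) + rng(0, jitter)
            for attempt in range(max_retries - 1)]


# With jitter disabled the schedule is deterministic.
print(backoff_delays(max_retries=5, base_delay=2, jitter=0))
```

With five attempts and a base delay of two seconds, the waits grow as 2, 4, 8, and 16 seconds, so a full retry cycle takes about half a minute before the error is raised to the caller.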

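    import random
    import time
    from functools import wraps

    import requests


    def retry_with_backoff(max_retries=3, base_delay=1):
        """Retry the wrapped call on rate limit errors, doubling the delay each time."""
        def decorator(func):
            @wraps(func)
            def wrapper(*args, **kwargs):
                for attempt in range(max_retries):
                    try:
                        return func(*args, **kwargs)
                    except Exception as e:
                        # Retry only rate limit errors, and only while attempts remain.
                        if "rate limit" in str(e).lower() and attempt < max_retries - 1:
                            # Exponential delay plus jitter to avoid synchronized retries.
                            delay = base_delay * (2 ** attempt) + random.uniform(0, 1)
                            time.sleep(delay)
                            continue
                        raise
            return wrapper
        return decorator


    @retry_with_backoff(max_retries=5, base_delay=2)
    def make_github_request(url, headers):
        response = requests.get(url, headers=headers, timeout=30)
        # GitHub signals rate limiting with 429, or with 403 plus a Retry-After
        # header or an exhausted X-RateLimit-Remaining counter.
        rate_limited = response.status_code == 429 or (
            response.status_code == 403
            and ("Retry-After" in response.headers
                 or response.headers.get("X-RateLimit-Remaining") == "0")
        )
        if rate_limited:
            # Honor the server's requested wait before the backoff delay is added.
            retry_after = int(response.headers.get("Retry-After", 60))
            time.sleep(retry_after)
            raise Exception("Rate limited")
        return response

Check Rate Limit Headers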
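
The rate limit headers can also tell a client how long to pause. The sketch below assumes the header names GitHub documents (`retry-after`, `x-ratelimit-remaining`, `x-ratelimit-reset`) and expects a mapping with lowercase keys. The function name is illustrative.

```python
import time


def seconds_to_wait(headers, now=None):
    """Suggest how long to pause based on rate limit headers (illustrative).

    `headers` is any mapping that uses lowercase header names.
    """
    now = time.time() if now is None else now
    # An explicit retry-after value takes priority.
    if "retry-after" in headers:
        return float(headers["retry-after"])
    # With no requests left, wait until the reset time (UTC epoch seconds).
    if headers.get("x-ratelimit-remaining") == "0":
        reset = float(headers.get("x-ratelimit-reset", now))
        return max(0.0, reset - now)
    # Budget remains, so no pause is needed.
    return 0.0
```

The `headers` attribute of a `requests` response is case-insensitive, so it can be passed in directly. A plain dictionary needs lowercase keys.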

Always monitor rate limit headers in your requests:

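    from datetime import datetime, timezone


    def check_rate_limit(response):
        """Print a warning when the remaining request budget runs low."""
        remaining = int(response.headers.get('X-RateLimit-Remaining', 0))
        # X-RateLimit-Reset is a UTC timestamp in epoch seconds.
        reset_time = int(response.headers.get('X-RateLimit-Reset', 0))

        if remaining < 100:  # Warning threshold
            reset_at = datetime.fromtimestamp(reset_time, tz=timezone.utc)
            print(f"Warning: Only {remaining} requests remaining")
            print(f"Rate limit resets at: {reset_at.isoformat()}")

        return remaining, reset_time

CI/CD Pipeline Optimization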

Batch API Requests

Combine multiple operations into single requests:

    # Instead of multiple individual requests
    - name: Get PR details
      env:
        GH_TOKEN: ${{ github.token }}  # the gh CLI needs a token in Actions
      run: |
        # Bad: three API calls for one pull request
        # gh pr view ${{ github.event.pull_request.number }} --json title
        # gh pr view ${{ github.event.pull_request.number }} --json body
        # gh pr view ${{ github.event.pull_request.number }} --json files

        # Good: a single API call
        gh pr view ${{ github.event.pull_request.number }} --json title,body,files

Cache API Responses
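
One way to avoid spending quota on unchanged data is a conditional request: the client sends the `ETag` it saw last time in an `If-None-Match` header, and the server answers `304 Not Modified` when nothing changed. GitHub's documentation states that such a 304 response to a correctly authorized request does not count against the primary rate limit. The sketch below shows the bookkeeping only. The `ETagCache` class and the `fetch` callback are hypothetical, and the actual HTTP call is left to the caller.

```python
class ETagCache:
    """Remember ETags and bodies so repeat requests can be conditional."""

    def __init__(self, fetch):
        # `fetch(url, headers)` is a caller-supplied function (an assumption of
        # this sketch) returning (status_code, response_headers, body).
        self.fetch = fetch
        self._store = {}  # url -> (etag, body)

    def get(self, url):
        headers = {}
        cached = self._store.get(url)
        if cached:
            # Ask the server to reply 304 if the resource is unchanged.
            headers["If-None-Match"] = cached[0]
        status, resp_headers, body = self.fetch(url, headers)
        if status == 304 and cached:
            return cached[1]  # unchanged: reuse the stored body
        etag = resp_headers.get("etag")
        if status == 200 and etag:
            self._store[url] = (etag, body)
        return body
```

Passing the HTTP call in as a function keeps the cache logic independent of any particular client library and makes it easy to exercise with a fake `fetch` in tests.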

Use GitHub Actions cache to reduce API calls:

    - name: Cache API response
      uses: actions/cache@v4
      with:
        path: ~/.cache/github-api
        key: ${{ runner.os }}-api-cache-${{ github.sha }}
        restore-keys: |
          ${{ runner.os }}-api-cache-

    - name: Use cached data
      env:
        GH_TOKEN: ${{ github.token }}  # the gh CLI needs a token in Actions
      run: |
        if [ -f ~/.cache/github-api/data.json ]; then
          echo "Using cached data"
        else
          echo "Fetching fresh data"
          # The cache directory does not exist after a cache miss.
          mkdir -p ~/.cache/github-api
          gh api repos/${{ github.repository }}/commits > ~/.cache/github-api/data.json
        fi

Optimize Workflow Triggers

Reduce unnecessary workflow runs:

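    # Only run on specific paths
    on:
      push:
        branches: [main]
        paths:
          - 'src/**'
          - 'package.json'
          - '.github/workflows/**'

    # Skip the whole job for draft PRs (the job name "build" is an example).
    # A step that only runs "exit 0" would not stop the steps after it.
    jobs:
      build:
        if: github.event.pull_request.draft == false
        runs-on: ubuntu-latest
        steps:
          - run: echo "Runs only for non-draft PRs"

Monitoring and Alerting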
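
The `/rate_limit` endpoint reports a separate budget for each resource group, such as `core`, `search`, and `graphql`, so a monitor should look at more than one number. The sketch below assumes the documented response shape, with a `resources` object whose entries carry `limit` and `remaining`. The function name, the 10% threshold, and the sample payload are made up for illustration.

```python
def low_resources(payload, threshold=0.1):
    """Return names of resources whose remaining share is below the threshold."""
    low = []
    for name, info in payload.get("resources", {}).items():
        limit = info.get("limit", 0)
        # Skip resources with no limit to avoid dividing by zero.
        if limit and info.get("remaining", 0) / limit < threshold:
            low.append(name)
    return sorted(low)


# Made-up sample payload in the shape returned by GET /rate_limit.
sample = {
    "resources": {
        "core": {"limit": 5000, "remaining": 120},
        "search": {"limit": 30, "remaining": 30},
    }
}
print(low_resources(sample))
```

Comparing against a share of the limit rather than a fixed count keeps the same check meaningful for small budgets like `search` and large ones like `core`.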

Set Up Rate Limit Monitoring

    - name: Monitor rate limits
      env:
        GH_TOKEN: ${{ github.token }}  # the gh CLI needs a token in Actions
      run: |
        # resources.core covers most REST API endpoints
        remaining=$(gh api rate_limit --jq '.resources.core.remaining')
        if [ "$remaining" -lt 100 ]; then
          echo "::warning::Rate limit low: $remaining requests remaining"
        fi

Create Rate Limit Dashboard

    from datetime import datetime

    import requests


    def send_alert(message):
        # Placeholder: replace with a call to your Slack/Teams webhook.
        print(f"ALERT: {message}")


    def monitor_rate_limits(token):
        headers = {'Authorization': f'token {token}'}
        response = requests.get('https://api.github.com/rate_limit',
                                headers=headers, timeout=30)
        response.raise_for_status()
        # resources.core holds the budget for most REST API endpoints.
        rate = response.json()['resources']['core']

        print(f"Remaining: {rate['remaining']}")
        print(f"Reset time: {datetime.fromtimestamp(rate['reset'])}")

        if rate['remaining'] < 100:
            send_alert(f"GitHub rate limit low: {rate['remaining']} remaining")

Best Practices Summary

- Use GitHub Apps for higher rate limits and better security

- Implement exponential backoff in all API calls

- Monitor rate limit headers in your applications

- Cache API responses to reduce redundant requests

- Batch multiple operations into single API calls

- Optimize workflow triggers to avoid unnecessary runs

- Set up monitoring to get early warnings

- Rotate tokens regularly for security, and distribute load across separate identities rather than across several tokens for one user

Conclusion

GitHub rate limiting is a common issue that can break your automation, but it's entirely avoidable with proper planning. The key is understanding your usage patterns and implementing the right strategies from the start.

Focus on using GitHub Apps for higher limits, implementing proper retry logic, and monitoring your usage. These simple changes can prevent hours of debugging and keep your CI/CD pipelines running smoothly.

Remember: rate limits exist to keep the API stable for everyone. By following these practices, you're not just avoiding limits; you're building more resilient and efficient automation.